Moon Smart Focus Uses AI to Track Your Meatbags

Machine learning comes to high-end cinema autofocus from Moon Smart Focus.

For a while now, we've known that machine learning and AI are going to change our jobs. The latest example comes from a Swedish startup: the Moon Smart Focus, a focus assist tool for high-end cinema production that uses a depth-sensing camera and a machine learning algorithm to identify subjects in the frame and track their distance. Sony just brought something similar to its new A7R.

The system was built by a small team, including a group of dedicated machine learning developers who continue to refine it, and it's already rolling out on some of the most exciting productions of the year.

Focus with AI

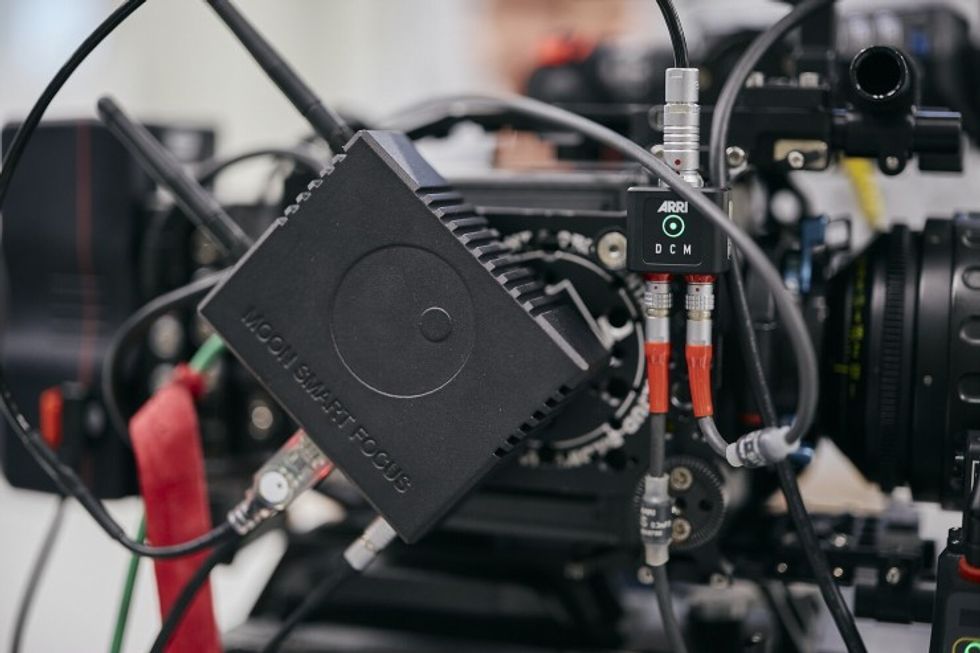

The Moon Smart Focus is built around two components: a "brain" that processes data and runs the model, and a camera-mounted sensor that measures the depth of every subject in front of the camera. The depth sensor is simply a commercially available Intel RealSense unit, which helps keep the system more affordable and flexible.

If Intel launches a new RealSense camera in a few years with more features, better resolution, and so on, there's a good chance Moon Smart Focus will support it, letting you upgrade to a better sensor without a major new investment in equipment.

The other half of that system is the interesting part—the brain that runs the software to process all that depth data and turn it into something ACs can use.

You connect to the brain over Wi-Fi or Ethernet from an iPad or iPhone. The iOS app then shows a preview of everything in frame and how far each subject is from the camera. If you've got five people out there, it will tell you how far away each of them is.

From there, with all that data at your fingertips, it's simple to pull focus between them as needed.

The Bits and Bytes of It All

All of this is done by a machine learning model that takes the depth data from the RealSense camera and turns it into usable information. The model recognizes people, something machine learning has gotten very good at, and then correlates them with the depth data to give an accurate read on how far each person is from the camera.

Right now, the Moon Smart Focus brain does all of this anonymously. To protect the actors' privacy, it doesn't build a profile of anyone or track them as individuals. To the system, they are all just "meatbags," but it can still tell you exactly where each one is for focus.
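For the technically curious, here is a rough Python sketch of the general idea of pairing person detections with depth data. It is not Moon's code. It assumes a depth map in meters that is already aligned to the camera image, with 0 marking pixels that have no depth reading, and hypothetical bounding boxes supplied by some person detector. Each box gets the median depth of its valid pixels, which shrugs off stray background pixels at the box edges. Note that the boxes carry no identity, much like the system's anonymous "meatbags."

```python
from statistics import median

def subject_distances(depth_m, boxes):
    """Estimate one distance (in meters) per detected subject.

    depth_m: 2D list of depths in meters, aligned to the image (assumed).
    boxes:   list of (x0, y0, x1, y1) pixel boxes from a hypothetical
             person detector; x1 and y1 are exclusive.
    Returns a list with one distance per box, or None when a box
    contains no valid depth readings.
    """
    distances = []
    for (x0, y0, x1, y1) in boxes:
        # Collect every valid depth sample inside the box.
        samples = [
            depth_m[y][x]
            for y in range(y0, y1)
            for x in range(x0, x1)
            if depth_m[y][x] > 0  # 0 is assumed to mean "no reading"
        ]
        # The median is robust to a few background pixels inside the box.
        distances.append(median(samples) if samples else None)
    return distances

if __name__ == "__main__":
    # Toy 4x4 depth map: a subject at about 2 m, a background wall at 5 m.
    depth = [
        [0.0, 2.0, 2.1, 5.0],
        [2.0, 2.0, 2.2, 5.0],
        [0.0, 0.0, 5.1, 5.0],
        [5.0, 5.0, 5.0, 5.0],
    ]
    boxes = [(0, 0, 3, 2), (3, 0, 4, 4)]
    print(subject_distances(depth, boxes))  # prints [2.0, 5.0]
```

A real system would work on full-resolution frames, many times per second, and would need to smooth the readings over time, but the core step of "find the person, then read the depth where they are" is the same.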

One of the most fascinating things about the Moon Smart Focus team is its willingness to evolve the system to fit customer needs. The team is launching with a small production run and wants to build the tools customers need for their projects into the software. That depends on plenty of customer feedback: real-world requests for what users wish the software could do. It could even include building custom machine learning models.

For instance, the unit is currently amazing at recognizing people. But what if you are working on a movie with a werewolf?

If you can get test images of your creature to the Moon Smart Focus team (ideally during pre-production testing), they can build a model of it, so the system tracks it like any other "meatbag" and you still get your real-time focus data.

The Next Step

Moon Smart Focus takes all this a step further by integrating with your existing follow focus systems from Preston, ARRI, or C-motion. Because it plugs directly into those units, you have the option of autofocus if you want it. That said, the Moon team says it has seen relatively little use of the system for autofocus, mostly in product work, where a predictably moving camera and a stable subject make autofocus particularly effective. Most users seem to simply appreciate having live data on the position of every actor in the scene so they can make their own focus decisions with it.

The Moon Smart Focus unit currently costs $24,000, so it will mostly be a purchase for top-tier first ACs and rental houses. But it's worth keeping up with what's happening at the high end, because that tech is trickling down to independent productions faster all the time.

Check out the Moon Smart Focus site for more.

What do you think of this new tech? Would you want this on your production? Let us know in the comments!

No Film School's coverage of