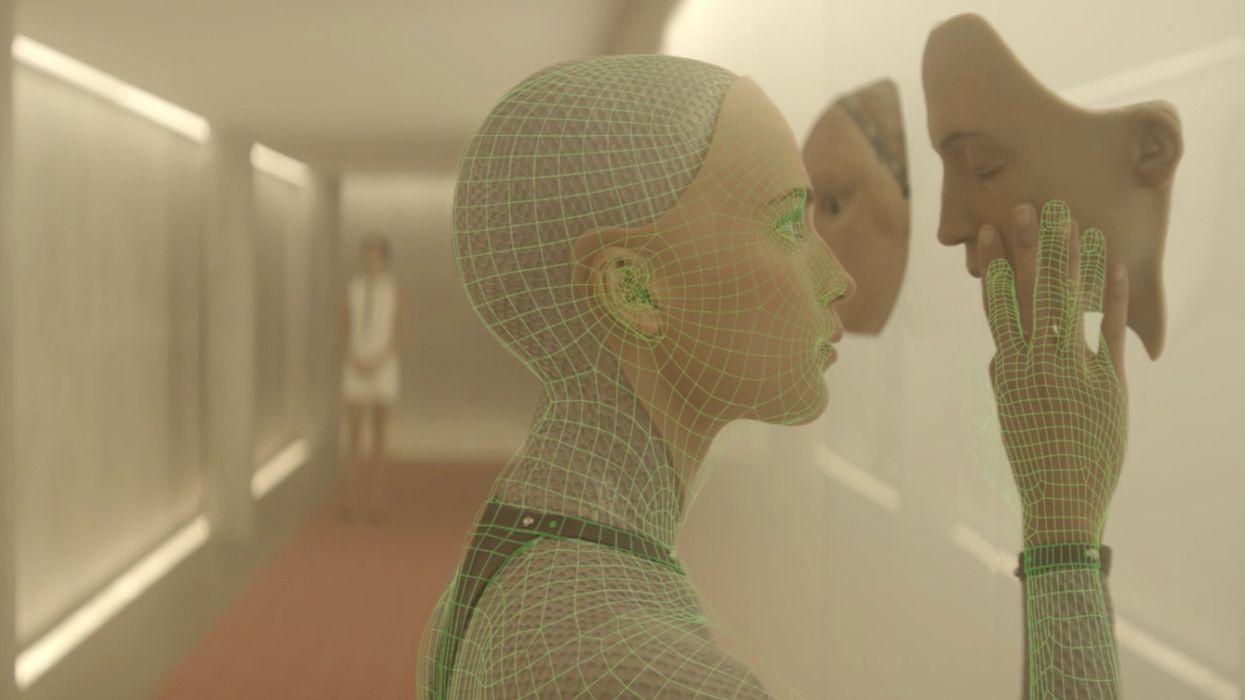

Ex Machina may not have had a huge budget by Hollywood standards, but thanks to VFX house Double Negative (along with Milk VFX and Utopia), it certainly had some of the most impressive effects in a film last year. Bringing the robot Ava to life took a terrific Golden Globe-nominated performance from Alicia Vikander, but it was the intricate VFX that sealed the deal: her CG-rendered parts were always moving internally, replicating how they might look if they had been built for real.

Here's a VFX breakdown courtesy of fxguide:

Featurette talking about the creation of Ava:

And here's a portion of fxguide's interview with VFX supervisor Andrew Whitehurst (the longer feature article has more):

fxg: What would you say was it that made the visual effects for Ava so convincing?

Whitehurst: I think that because VFX were part of the whole production process from very early on meant we were able to work with all the other departments on the show to get what we required to do our job to the best of our abilities. From the make-up, costume to lighting and camera we ended up with a full range of HDR lighting for every set up, a costume that had appropriate tracking markers built into it, and a costume was constructed to minimize seams in areas that would remain in-camera (the shoulders for example). Team work and deep collaboration were key. If I as a supervisor can't give the VFX artists the best material to work with, you can't expect to get great VFX out of them.

While the JDC Xtal Express anamorphic lenses used for the film gave the image a lot of character, they also complicated the VFX work, since their odd distortions had to be worked into the CG components of Ava to sell the effect. Director Alex Garland wanted to shoot the film without worrying about where the camera had to be placed, which meant the VFX team had a lot of work ahead of them (including painting out a lot of crew reflections):

...although significant digital work would be carried out by the visual effects team, motion capture was not an option. The actors were always in the plates. For each take, then, a clean plate was acquired, and later extensive body tracking - including for shots up to 1600 frames - would be necessary.

The mechanical parts and ribbons were rendered via DNeg’s physically plausible rendering pipeline (which uses RenderMan). “Physically plausible gives you that photographic quality that you can’t get any other way,” says Whitehurst.

That's likely why all of the departments worked so closely together before the start of production: they could figure out the best way to create a robot that had just enough real, practical elements to help them in post and, at the same time, help the audience believe what they were seeing.

If you missed it, here's a fantastic talk with the film's DP, Rob Hardy, about the challenges of shooting and lighting the film, which was captured on the Sony F65:

Be sure to check out the longer fxguide article for all of the details on the VFX work.

Source: fxguide