540 Terabytes of Data: 4 Takeaways from the Groundbreaking 'Billy Lynn's Long Halftime Walk' Post Team

What does it take to complete post on a film shot in 4K, 120fps, and stereo 3D?

Everyone who signed on to work on the new Ang Lee project Billy Lynn's Long Halftime Walk knew they would get to work on a project at the bleeding edge of film technology. But as David Bowie once said, “The first one to the bleeding edge gets cut.”

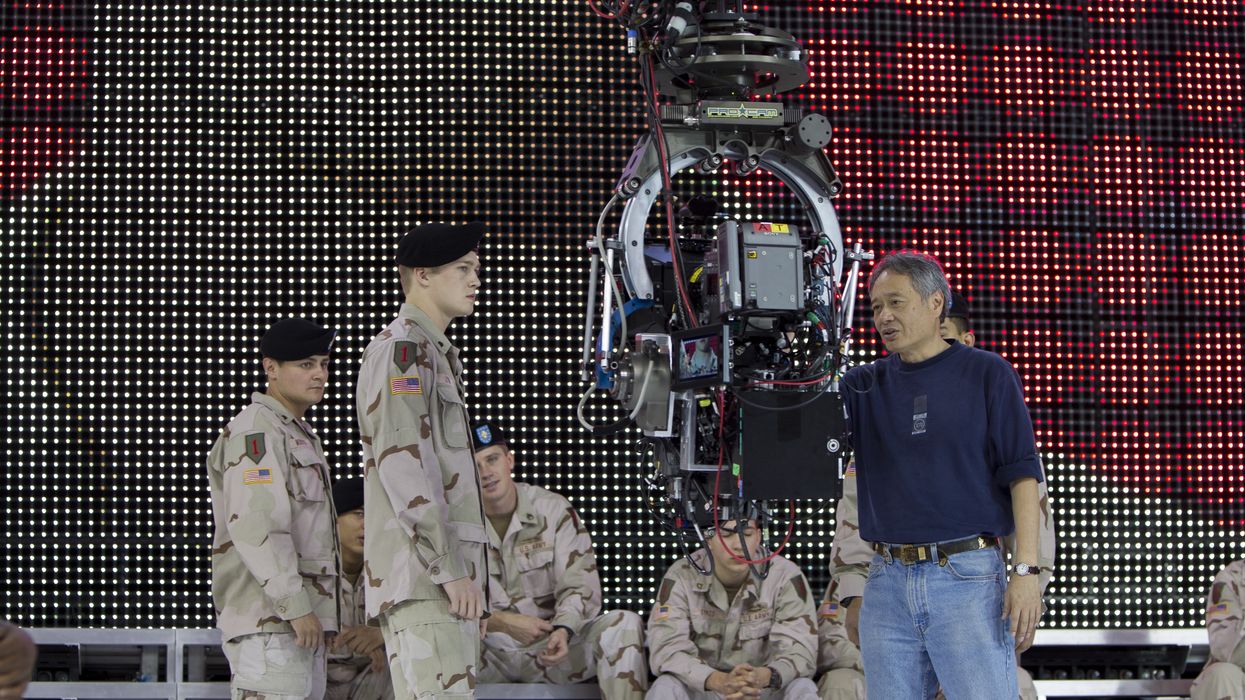

Lee’s vision for the film involved shooting in a format that no one else had shot before: 4K, 120fps, stereo 3D. This produced more than 540TB of dailies and an 84TB final delivery file, a deliverable the team referred to as “the whole shebang.” To deliver on that vision, he assembled a technical and creative team to build a brand new workflow from scratch. The team reassembled at the HBO offices in Manhattan last week to share what they learned, in an event arranged by the Blue Collar Post Collective.

On stage were assistant editor Andrew Leven, dailies technician Derek Schweickart, colorist Marcy Robinson, technical supervisor Ben Gervais, VFX artists Alex Lemke and Michael Huber, and VFX producer Leslie Hough. The talk was moderated by Shahir Daud from The Only Podcast About Movies.

Here are four big takeaways from the post team to keep in mind for future projects where you might be working in a brand new format and developing a workflow as you go.

1. Sometimes new technology feels like old technology

One of the first things technical supervisor Ben Gervais remarked on was working with the stereo rig. “With a native 800 ASA camera, shooting 120fps 360° shutter through a 50/50 split mirror, you end up with functionally 160 ASA sensitivity, so it was like shooting a '70s movie in 2016.” This required far more light on set than is typical on modern shoots. With a tremendously heavy stereo rig carrying two Sony F65 cameras, the crew was working in the most cutting-edge of formats while lighting to levels that haven’t been required in decades.
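
The 160 ASA figure can be reproduced with some quick arithmetic. The sketch below is one plausible reading of the math, not something stated on stage: it assumes the comparison baseline is a conventional 24fps, 180° shutter exposure, and that the 50/50 mirror passes half the light to each camera. The function name and parameters are made up for illustration.

```python
import math


def effective_iso(native_iso, fps, shutter_angle_deg, mirror_transmission,
                  ref_fps=24.0, ref_shutter_angle_deg=180.0):
    """Sensitivity relative to an assumed 24fps / 180-degree baseline."""
    # Exposure time per frame is the open fraction of the shutter over the frame rate.
    exposure_time = (shutter_angle_deg / 360.0) / fps
    ref_exposure_time = (ref_shutter_angle_deg / 360.0) / ref_fps
    # Scale the native rating by the shorter exposure and by the mirror loss.
    return native_iso * (exposure_time / ref_exposure_time) * mirror_transmission


# Figures quoted by Gervais: 800 ASA native, 120fps, 360-degree shutter, 50/50 mirror.
iso = effective_iso(800, fps=120, shutter_angle_deg=360, mirror_transmission=0.5)
stops_lost = math.log2(800 / iso)

print(round(iso))            # 160
print(round(stops_lost, 2))  # 2.32
```

Under those assumptions, the 1/120s exposure costs about 1.3 stops against a 1/48s baseline and the mirror costs one more, which lands on the quoted 160 ASA.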

2. Building it yourself can save you money, even on a big studio movie

VFX producer Leslie Hough perfectly articulated a theme repeated by many people on stage when she discussed getting bids for particularly complicated shots. “We would go to all the big vendors, and explain what we needed and the format we were working in, and most would pass, while the rest would give us a bid so high it was basically a pass. In the end the only way to do it was internally.”

When doing particularly complicated workflows, sometimes it’s just cheaper to build the setup yourself and execute in-house than it is to go to an outside vendor. This is at least partially because the vendor is going to charge you for the time they need to learn how to deal with your format. Since you already understand its challenges, building elements, workflows, or equipment from scratch can save your production a lot of money.

3. Keep the whole team under one roof

Outsourcing many parts of the post workflow is increasingly common, but when inventing new processes or working with non-standard formats, having the team all together can be a real lifesaver. “We could literally just walk one office down and talk to VFX, or another office down and preview things in the Baselight, and immediately know if something we were working on was going to succeed,” said Schweickart.

Those conversations are much harder to have over the telephone, even with someone in the same town, and having to drive to another post house every day to preview shots would slow everything to a crawl.

The entire team worked out of Ang Lee’s office, and it was the only way to pull it off.

4. VFX libraries aren’t ready for new formats

VFX artist Alex Lemke realized partway through how much he depended on previously built effects libraries. “Like any veteran of the industry, I have accumulated a library of elements over the years, bullet hits, flares, etc. that I could use at will. But they were all 24fps, and didn’t work at all in 120fps! We pushed for time shooting elements in production, but it was never possible, so we often had to create 3D elements from CGI that on another production we could just use a flat plate we had on our hard drive already.”

Anyone considering a new workflow should definitely keep this in mind. Between stock footage (often used in sky replacements), and other VFX elements, most modern projects are assembled out of a great variety of footage, and the vast majority of that is 2D, 24fps. If you are moving to a new format, you’ll have to not just shoot principal in the format; everything is going to need to be in the new format.
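
To see why a 24fps element falls apart on a 120fps timeline, it helps to count how many output frames each source frame has to cover. This is a minimal sketch assuming the simplest possible conform, a plain frame hold with no interpolation; the function name is hypothetical and not taken from any tool the team used.

```python
def source_frame_for(output_frame, source_fps=24, target_fps=120):
    """Index of the source frame shown at a given output frame (simple frame hold)."""
    return output_frame * source_fps // target_fps


# The first ten 120fps frames of a conformed 24fps element.
mapping = [source_frame_for(n) for n in range(10)]
print(mapping)  # [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

# Each source frame is held for this many output frames.
hold = 120 // 24
print(hold)  # 5
```

Only one output frame in five carries new motion, so a fast element like a bullet hit would visibly stutter against a plate that updates on every frame.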

For indie projects without a CG team, it becomes even more important to find time to shoot plates, elements, etc. in your custom format, or to find a way to access footage from that format in post. If you are planning to do some drone establishing shots, you'll need to find a way to shoot those in your new format. Getting to "the whole shebang" requires making sure everything in "the whole shebang" was captured in "the whole shebang."

You can hear the whole event on The Only Podcast About Movies.