EXCLUSIVE: Watch Lytro Change Cinematography Forever

The Lytro Cinema Camera could be the most groundbreaking development in cinematography since color motion picture film. We go "in-depth" with the entire Lytro system in this exclusive video:

Since Lytro first teased their Cinema Camera earlier this month, articles have been written. Press conferences were held. Lytro's presentation at NAB 2016 was standing room only and hundreds were turned away. Several press outlets did write-ups of the demo; we’ve been writing about the technology concept for five years. But words don't do it justice: you have to see the new Lytro cinema system in action, including its applications in post-production, to understand just how much this technology changes cinematography forever.

On its own, it would be a supreme technical accomplishment to develop a 755 megapixel sensor that shoots at 300 frames per second with 16 stops of dynamic range (for reference, the latest ARRI and RED cinema cameras top out at 19 and 35 megapixels, respectively). But those outlandish traditional specifications might be the least interesting thing about the Lytro Cinema Camera. And that’s saying something, when developing the highest resolution video sensor in history isn’t the headline.
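To get a feel for why those numbers are outlandish, here is a rough back-of-the-envelope sketch of the raw sample rate they imply. The 755 megapixel and 300 frames per second figures come from the specs above; the bit depth is purely an assumption for illustration (Lytro's actual sensor readout and any compression are not described here), and `raw_rate_gb_per_s` is a hypothetical helper name.

```python
# Figures quoted in the article.
PIXELS_PER_FRAME = 755_000_000   # 755 megapixels
FRAMES_PER_SECOND = 300

# Raw pixel samples produced every second at full resolution and frame rate.
samples_per_second = PIXELS_PER_FRAME * FRAMES_PER_SECOND


def raw_rate_gb_per_s(bits_per_sample):
    """Uncompressed data rate in gigabytes per second (1 GB = 1e9 bytes).

    bits_per_sample is an ASSUMPTION supplied by the caller; the article
    does not state the sensor's bit depth.
    """
    return samples_per_second * bits_per_sample / 8 / 1e9


# Hypothetical 12-bit readout, chosen only to make the arithmetic concrete.
assumed_rate = raw_rate_gb_per_s(12)
print(f"{samples_per_second:,} samples/s, {assumed_rate:.2f} GB/s at an assumed 12 bits")
```

Whatever the real bit depth turns out to be, the sample count alone (hundreds of billions per second) hints at why the current system needs far more than a camera-mounted recorder.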

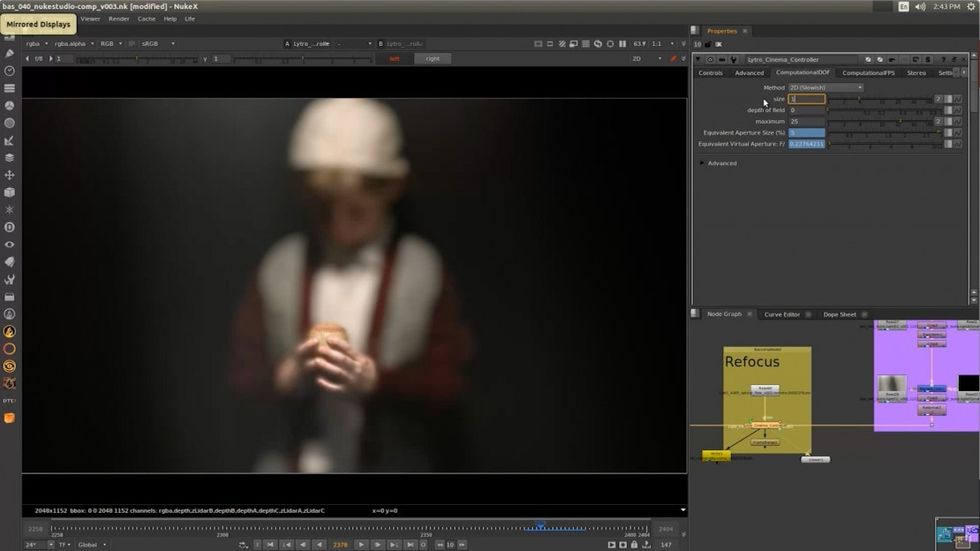

The headline, as Jon Karafin, Head of Light Field Video at Lytro, explains, is that Lytro captures "a digital holographic representation of what's in front of the camera." Cinematography traditionally "bakes in" decisions like shutter speed, frame rate, lens choice, and focal point. The image is "flattened." By capturing depth information, Lytro is essentially turning a live action scene into a pseudo-CGI scene, giving the cinematographer and director control over all of those elements after the fact.

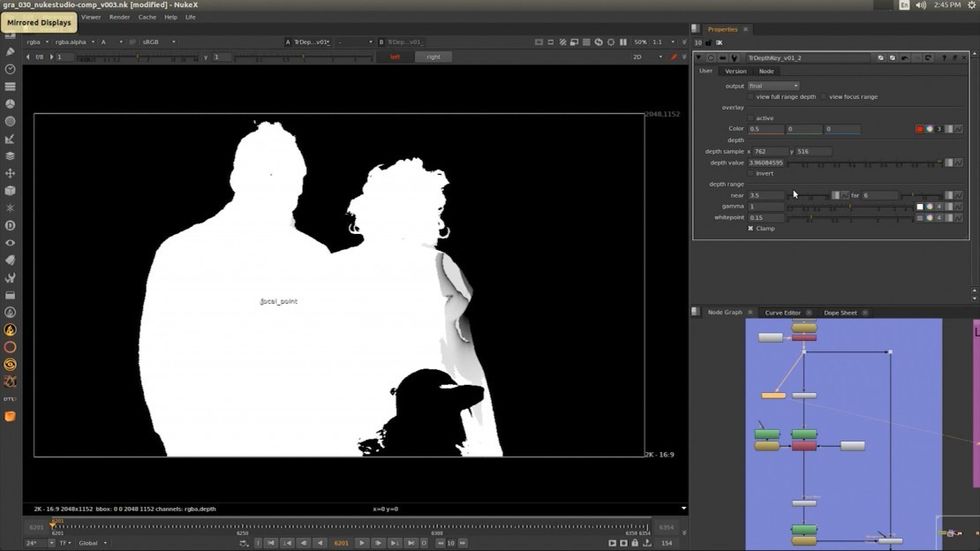

The technique, which is known as light field photography, is not simply enabling control ex post facto over shutter speed or frame rate: the implications for visual effects are huge. You can "slice" a live scene by its different "layers." Every shot is now a green screen shot. But it's not an effect, per se; as Karafin notes, "it's not a simulation. It's not a depth effect. It's actually re-ray-tracing every ray of light into space."

To fully understand the implications of light field photography requires a lengthy video demo… so we released one (watch our 25-minute Lytro walkthrough above). Karafin gave us a live demonstration of "things that are not possible with traditional optics" as well as new paradigms like "volumetric flow vectors." You can tell the demo is live because the CPU starts heating up and you can hear the fans ramp up in our video… and the computer was on the other side of the room.


Visual effects implications

Even if light field photography fails to revolutionize cinematography, it will almost certainly revolutionize visual effects. Today, visual effects supervisors place tracking markers on various parts of a scene (think of the marks you often see on a green screen cyc) so that they can reconstruct the camera's movement in post. The Lytro camera, because of its understanding of depth, knows not only where it is in relation to the elements of a scene but also where the subjects are in relation to the background (and where everything is in between). This depth data is made available to the visual effects artists, which makes their integration of CGI elements much more organic because everything in the scene now has coordinates on the Z-axis. They're not matteing out a live-action person to mask out a CGI element; with Lytro they are actually placing the CGI element behind the person in the scene. And green screening takes on a new meaning; as you can see in the demo, it's no longer chroma- or luminance-based keying, but is instead true "depth-screening." You can cut out a layer of video (and dive into more complex estimations for things like hair, netting, etc.). With Lytro, you don't need a particular color backdrop to separate the subject; now you can simply separate the subject based on its distance from other objects.
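The idea of "depth-screening" can be illustrated with a toy sketch. This is not Lytro's pipeline or any real tool's API: it simply assumes each pixel comes with a depth value in meters, and the function names `depth_matte` and `composite_by_depth` are hypothetical. A hard threshold like this also ignores the harder cases mentioned above, such as hair and netting.

```python
def depth_matte(depth, near, far):
    """Return a 0/1 matte selecting pixels whose depth lies in [near, far].

    depth is a 2D list of per-pixel distances from the camera (assumed
    to be in meters). No particular backdrop color is required.
    """
    return [[1 if near <= z <= far else 0 for z in row] for row in depth]


def composite_by_depth(live, live_depth, cg, cg_depth):
    """Per pixel, keep whichever sample (live action or CG) is closer.

    This is how a CG element ends up *behind* an actor without a
    hand-made matte: the actor's pixels simply have a smaller depth.
    """
    out = []
    for l_row, ld_row, c_row, cd_row in zip(live, live_depth, cg, cg_depth):
        out.append([
            l if ld <= cd else c
            for l, ld, c, cd in zip(l_row, ld_row, c_row, cd_row)
        ])
    return out


# Toy 2x3 frame: an actor about 1.5 m away in front of a wall 8 m away.
live = [["actor", "actor", "wall"],
        ["actor", "wall", "wall"]]
live_depth = [[1.5, 1.6, 8.0],
              [1.4, 8.0, 8.0]]

# "Key" the actor by distance alone.
matte = depth_matte(live_depth, near=1.0, far=2.0)

# A hypothetical CG robot placed 4 m from the camera across the whole frame.
cg = [["robot"] * 3 for _ in range(2)]
cg_depth = [[4.0] * 3 for _ in range(2)]
result = composite_by_depth(live, live_depth, cg, cg_depth)

print(matte)
print(result)
```

In the composite, the robot covers the wall but sits behind the actor, purely because of where each sample falls on the Z-axis.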

Every film is now (or could be) Stereoscopic 3D

Stereoscopic 3D is another matter entirely. To shoot in 3D currently involves strapping two cameras together in an elaborate rig, with a stereographer setting and adjusting the interaxial separation for every shot (or doing a 3D "conversion" in post, with artists cutting out different layers and creating parallax effects manually, which yields inferior results). The Lytro camera, because it has millions of micro lenses capturing a scene from slightly different perspectives, can do 3D in post without it being a simulation. You don't need to shoot on two cameras for a 3D version and then just use the left or right camera for the 2D version. With Lytro you can set the parallax of every shot individually, choose the exact "angle" within the "frustum" you want for the 2D version, and even output an HFR version for 3D and a 24P version for 2D, with the motion blur and shutter speed of each being "optically perfect," as Karafin notes. Even if you shoot your film on a Lytro only with 2D in mind, if advances in 3D display technology later change your mind (glasses-less 3D, anyone?), you could "remaster" a 3D version that doesn't have any of the artifacts of a typical 3D conversion. With Lytro you're gathering the maximum amount of data independent of the release format and future-proofing your film for further advances in display technology (more on this in a bit).

What light field photography doesn't change

The art of cinematography—or as many of its best practitioners deem it, a "craft" as opposed to an art—is not limited to camera and lens choices. Cinematography is often referred to as "painting with light," and the lighting of a scene, with or without a Lytro camera, is still the primary task. While Lytro is capturing depth info, to my understanding the actual quality and angle of light is being interpreted and captured but is not wholly changeable in post (it's also possible that, if it is, it simply went over my head). As Karafin notes, Lytro's goal with the cinema camera (as opposed to their Immerge VR technology, which allows a wholly moveable perspective for virtual reality applications) is to preserve the creative intent of the filmmakers. This means your lighting choices and your placement of the camera are, for the most part, preserved (the current version of the cinema camera can be "moved" in post by only 100mm, or about 4 inches). As a director you are still responsible for the blocking and performances. As a cinematographer you are still responsible for all of the choices involved in lighting and camera movement.

This is part of a continuum. As cinematography has transitioned into digital capture, it has in many ways become more of an acquisition process more heavily involving decisions made in post. The Digital Intermediate gives the colorist a greater amount of control than ever before. Cinematographer Elliot Davis (Out of Sight, The Birth of a Nation) recently told NFS if he could only have one tool besides the camera, it would be the D.I., not lights or any on-set device. Lytro is maximizing the control filmmakers have in post-production but it is not actually "liberating your shots from on-set decisions" (our fault for not fully understanding the technology, pre-demo).

The short test film Life

I can already hear people reacting to some of the shots in the demo with "but this effect looks cheesy" or "that didn’t look realistic." The same can be said for any technique in inexperienced hands; think of all the garish HDR photographs you've seen out there. With those photographs, HDR itself isn't the issue; it's the person wielding the tool. And in the case of Lytro, there is no such thing as an experienced user. The short film Life will be the first. Even in the hands of experienced Academy Award winners like Robert Stromberg, DGA, and David Stump, ASC (along with The Virtual Reality Company), the Lytro Cinema Camera is still a brand-new tool whose techniques and capabilities are unknown.

The success or failure of Lytro as a cinema acquisition device has little to do with how Life turns out, given the technological implications extend far beyond one short film. Lytro has only publicly released this teaser, but the full short film will be coming in May:

Cameras aren’t done

On our wrap-up podcast from NAB, my co-host Micah Van Hove posited that "cameras are done." His thesis was that the latest video cameras have reached a rough feature parity when measured by traditional metrics like resolution, dynamic range, frame rates, and codecs. We deemed it a "comforting" NAB because as filmmakers there wasn’t something new we had to worry about that was going to make our current cameras obsolete.

And then the next day I went to the Lytro demo. So much for "nothing new." And when it comes to making current cameras obsolete… you can see a scenario where the future, as envisioned by Lytro, is one of light field photography being ubiquitous. Remember when a "camera phone" was something different from a "smart phone," which in turn was something different from a "regular" phone? Now that the technology is mature they’re just "phones" again. With handheld mobile devices, features like capturing images and the ability to use the internet have become part of what’s considered "standard." Similarly, maybe light field photography will just be called "photography" one day. Is it only a matter of time until all cinematographers are working with light fields?

"Our goal is ultimately to have light field imaging become the industry standard for capture. But there’s a long journey to get there."

Democratization of the technology

Right now the tools and storage necessary to process all of this data are enterprise-only. The camera is the size of a naval artillery gun. It is tethered to a full rack of servers. But with Moore's law and rapid gains in cloud computing, Lytro believes the technology will come down in size to the point where, as Karafin says, it reaches a "traditional cinema form factor."

If Lytro can also get the price down, you can see a scenario where on an indie film—where time is even shorter than on a larger studio film—the ability to "fix" a focus pull or stabilize a camera without any corresponding loss in quality would be highly desirable. That goes for documentary work as well—take our filming of this demo, for example. The NFS crew filmed what will end up being over a hundred videos in 3.5 days at NAB. To take advantage of the opportunity to film this demo we had to come as we were—the tripod was back in the hotel (the NAB convention center is 2,000,000 square feet so when you’re traversing the floor, you are traveling light). As a result, I’m sure viewers of our video demo above will notice some stabilization artifacts on our handheld camera. Had we captured the demo using light field technology, that stabilization could be artifact-free.

In filmmaking, for every person working in real-world circumstances, where time and money are short and Murphy’s Law is always in effect, there are ten people uninvolved in the production who are quick to chime in from the sidelines with, "that’s not the way you’re supposed to do it." But experienced filmmakers know that no matter how large your budget or how long your shooting schedule, there is no such thing as an ideal circumstance. You try to get it right on the day, with the personnel and the equipment you have on hand, but you are always cleaning up something after the fact. Even Fincher reframes in post. Lytro allows for much more than reframing.

The ability to "fix everything in post" is surely not the primary goal of Lytro (see "storytelling implications" below), but it’s an undeniable offshoot of capturing all of this data. And should the technology be sufficiently democratized, it would be enabling for all levels of production.

For anyone opposed to this greater amount of control, let me ask: do you shoot with LUTs today? If so, you are using a Look Up Table to help the director and cinematographer see their desired look on the monitors during the shoot, but that look is not "baked in." In post, you still have all of the RAW data to go back to. In a way, to argue against Lytro’s ability to gather the maximum amount of data and retain maximum flexibility in post would also be to argue against something like shooting RAW (not to mention that celluloid could be developed in any number of ways… which always took place after shooting).


Corresponding advances in display technologies

Imaging isn’t going to advance only from an acquisition standpoint; there will be a corresponding advance in display technologies. Taking all of this information and displaying it on a flatscreen monitor (even a conspicuously large one) feels like a compromise. Lytro surely isn’t aiming to bring the best-looking images to the existing ecosystem of 50" flat screens; they must be thinking way beyond that.

Imagine your entire wall is a display, not just a constrained rectangle: you're going to want that extra resolution, going well past 4K. Imagine you can view material in 3D without glasses or headaches: you're going to want the depth information and control. Imagine you're wearing a headset and you can look around inside of the video (and each eye, in stereoscopic 3D, effectively doubles your resolution needs): you’re going to want all of these things together.

What are the storytelling implications of Lytro?

With these display advances, storytelling will change, just as it did when the basic black-and-white motion picture added sound, color, visual effects, and surround sound (I was going to add 3D to the list, but there’s still so much backlash against it, and the technology is still so much in its infancy, that it’s not a great example… yet). Suffice it to say that the experience of watching Clouds Over Sidra on a VR headset is entirely different from watching it contained within the boundaries of a small rectangle on a flat screen. The storytelling was informed by the technology.

In an era where video games and computer-generated animation are seemingly advancing faster technologically than traditional filmed live-action, Lytro shows there are still plenty of new techniques in the “live action” toolbox. But on its own a tool does not change anything; it will always come back to the same age-old question: can you tell a story with it? Lytro is a newfangled, technically complex, eyebrow-raising, mind-blowing tool. But, as ever, what you do with it is up to you.

No Film School's complete coverage of NAB 2016 is brought to you by My RØDE Reel, Shutterstock, and Blackmagic Design.
