Who Needs a $20,000 Lens? New Technique Creates Amazing Images from Cheap Lenses

If you've taken photos or video with a cheap prime lens, like a newer 50mm f/1.8, you might have been surprised by how well the lens performed. That's because lenses have been designed with the help of computers for some time now, and even cheap lenses can correct for many of the issues that must be accounted for to get a sharp and error-free image. But lens development for a given sensor size can only get so good, and if you want near-perfect lenses, like Zeiss Master Primes or Leica Summilux-Cs, the cost is very, very high. What if we're going about this all wrong, though, and we should instead use the considerable processing power of cameras or post-production to make our lenses essentially perfect? That's exactly what the SimpleLensImaging research group is working on.

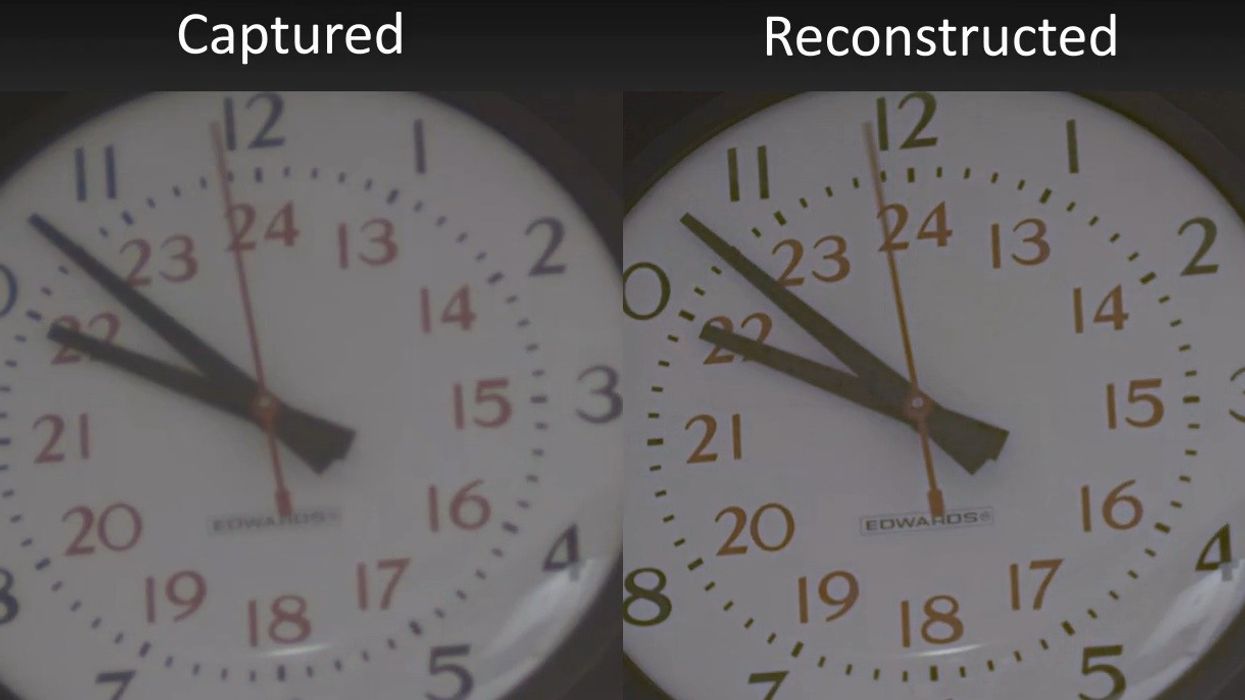

The group has taken an extremely cheap single-element lens and created fantastic images from it using a complicated algorithm:

Modern imaging optics are highly complex systems consisting of up to two dozen individual optical elements. This complexity is required in order to compensate for the geometric and chromatic aberrations of a single lens, including geometric distortion, field curvature, wavelength-dependent blur, and color fringing.
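To get a feel for how software can undo "wavelength-dependent blur," here is a minimal Python sketch. It is not the group's algorithm, which is far more involved. It swaps in plain Wiener deconvolution, a much simpler textbook technique, and everything in it is an assumption made for illustration: the Gaussian blur kernels, the per-channel blur widths, the noise level, the `nsr` setting, and the function names. The idea is only that if you know how a lens smears each color channel, you can partially reverse that smear in software.

```python
import numpy as np

def gaussian_psf(size, sigma):
    """Hypothetical stand-in for a lens blur kernel: a normalized 2-D Gaussian."""
    ax = np.arange(size) - size // 2
    xx, yy = np.meshgrid(ax, ax)
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def psf_to_otf(psf, shape):
    """Zero-pad the kernel to the image shape, center it at the origin, then FFT."""
    padded = np.zeros(shape)
    h, w = psf.shape
    padded[:h, :w] = psf
    padded = np.roll(padded, (-(h // 2), -(w // 2)), axis=(0, 1))
    return np.fft.fft2(padded)

def blur(channel, psf):
    """Simulate the lens: circular convolution of one color channel with its kernel."""
    otf = psf_to_otf(psf, channel.shape)
    return np.real(np.fft.ifft2(np.fft.fft2(channel) * otf))

def wiener_deconvolve(channel, psf, nsr=1e-3):
    """Wiener deconvolution; nsr is an assumed noise-to-signal ratio."""
    otf = psf_to_otf(psf, channel.shape)
    wiener_filter = np.conj(otf) / (np.abs(otf) ** 2 + nsr)
    return np.real(np.fft.ifft2(np.fft.fft2(channel) * wiener_filter))

def demo():
    """Blur each channel of a synthetic image differently, then restore it.

    Returns two lists of mean squared errors against the sharp image:
    one for the blurred channels and one for the restored channels.
    """
    rng = np.random.default_rng(0)
    # Synthetic sharp test image: a 64x64 checkerboard with 8-pixel squares.
    yy, xx = np.indices((64, 64))
    sharp = (((xx // 8) + (yy // 8)) % 2).astype(float)

    # Assumed blur widths (in pixels) for R, G, B: a single-element lens
    # focuses each wavelength differently, so each channel blurs differently.
    sigmas = [1.0, 1.5, 2.0]

    err_blurred, err_restored = [], []
    for sigma in sigmas:
        psf = gaussian_psf(15, sigma)
        observed = blur(sharp, psf) + rng.normal(0.0, 0.001, sharp.shape)
        restored = wiener_deconvolve(observed, psf)
        err_blurred.append(float(np.mean((observed - sharp) ** 2)))
        err_restored.append(float(np.mean((restored - sharp) ** 2)))
    return err_blurred, err_restored

if __name__ == "__main__":
    blurred, restored = demo()
    for name, b, r in zip("RGB", blurred, restored):
        print(f"{name}: blurred MSE {b:.5f}, restored MSE {r:.5f}")
```

Running the script prints the error of each channel before and after restoration. In this toy setup the blur is known exactly and the noise is tiny, which is the easy case. A real single-element lens has blur that varies across the frame and between colors, which is part of why the actual research needs something more complicated.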

Many cameras already correct internally for specific lenses, and some can even do it for video, but this is a different situation entirely. While certain products, like those from Metabones, actually improve performance as long as the lens was designed for a larger format than the sensor, lens development has more or less plateaued for lenses under $2K or $3K. That's not to say lenses aren't getting better. They absolutely are, but most of the improvement over the last 5-10 years has been in zoom lenses, which until recently lagged behind prime lenses in performance.

Cameras will eventually reach a point where they have far more processing power than they need, and they'll be able to perform all sorts of calculations in real time, for both photo and video. Lenses won't need to cost $20,000 to be perfect, because cheap lenses will be able to perform almost as well thanks to these kinds of algorithms. That doesn't mean there won't be benefits to having quality lenses. Even with excellent algorithms, getting something right optically in the first place may still yield better quality, but something like what is being done in the video above helps close the gap tremendously.

You can read more about the techniques at the link below.

Link: High-Quality Computational Imaging Through Simple Lenses