AWS at SIGGRAPH 2021 Looks to the Past to Predict the Future of Content Production

What does the history of CG tell us? Where we are going. And it looks like we’re all heading to the cloud, for the sake of a better entertainment industry.

This is sponsored content brought to you by AWS.

At SIGGRAPH 2021, the most renowned computer graphics (CG) technology conference in the world, Netflix, Weta Digital, Company 3, Epic Games, and Amazon Studios join Amazon Web Services (AWS) to take audiences on a trip “Through the Looking Glass: the Next Reality for Content Production.”

Thanks to the pandemic, every Tom, Dick, and Harriet now knows the basics of putting on a virtual event. But there’s a huge leap from a custom background on Zoom to a full-blown virtual production on an LED volume wall that’s safe, remote, and fit for high-caliber productions.

Upping the game for trade show keynote experiences, “Through the Looking Glass” is a microcosm of what’s happening in the industry overall.

Not only is the keynote a clever display of leveraging cloud services for virtual production, but it’s also a tour of the history of CG, and an exciting analysis of how to prepare for where content creation is heading tomorrow.

Hosted by Eric Iverson, CTO for AWS Media & Entertainment, the keynote brings together leaders from Netflix, Weta Digital, Company 3, AWS, Epic Games, and Amazon Studios to analyze today’s CG landscape and where it is heading.

How CG has been democratized in only a few decades of innovation

Looking back at the rapid pace of innovation in CG gives a sense of how quickly we’ve gotten here. What does the CG of yesterday tell us? Where we are headed tomorrow. Here’s a snapshot from the keynote.

The decade of the 1970s conjures images of disco and bell-bottoms. But it was also the decade when the CG industry was born. It saw the launch of the first SIGGRAPH conference, the creation of the first 3D animated hand, Atari’s release of “Pong,” and of course Star Wars, which inspired a whole generation of creatives.

A Computer Animated Hand was the name of Ed Catmull and Fred Parke’s revolutionary 1972 short film.

As the 1980s crept in, CG experiences continued to advance. Think of the Nintendo Entertainment System and Apple’s Macintosh personal computer.

In the 1990s, we saw the formation of Epic Games, the launch of Adobe Photoshop, and the debut of Terminator 2 and Pixar’s Toy Story.

Terminator 2 showed us the pioneering CG work of James Cameron, including the T-800 endoskeleton arm.

As the new millennium rolled in, so did AWS, with a vision for cloud-based computing. Personal computers with meaningful compute capabilities became more widely accessible, and filmmakers began to use advanced production techniques, such as real-time rendering on set for Avatar and AI-driven crowd simulation in The Lord of the Rings.

In the 2010s, cloud-based computing started to become the norm. Why? Shot complexity and volume increased dramatically, and studios kept up by offloading burst render workloads to the cloud.

To illustrate the point, Marvel’s billion-dollar blockbuster Avengers: Endgame alone featured 2.5 times as many VFX shots as Iron Man, which was released a little more than a decade earlier. In 2018, Untold Studios became the first fully cloud-based creative studio, blazing a trail for other studios to follow.

Marvel’s Avengers: Endgame shows us where computer-generated hands stand today.

What does this fast growth mean? It points in one direction, and the natural destination is cloud technology.

The tipping point of modern content production

At the start of 2020, the tools needed for cloud production were ready. But frankly, content creators were not. The onset of COVID-19 changed that, because everyone had to rethink how to plan, shoot, and edit projects.

Studios that integrated the cloud, whether through hybrid or all-in approaches, not only survived but capitalized on unprecedented opportunities.

Here’s a glimpse of a few companies that shared their current production strategies in the keynote.

Why Netflix has embraced the rise of remote work

For decades, studios wanted artists to be physically present to work on projects, so they recruited talent based on geographic location. Now, cloud-based remote production workflows give studios access to a larger talent pool and let artists choose projects without uprooting their families!

For Netflix, the cloud has been integral to its success. Netflix connects with artists around the world through NetFX, its cloud-based pipeline, which provides the infrastructure needed to create Netflix-caliber projects.

According to Rahul Dani, Netflix Director of Engineering, who oversees NetFX, the pipeline has been deployed on 35 productions spanning four continents. It has allowed the team to tell more authentic stories and freed creatives to be more imaginative.

Laura Teclemariam, Director of Product, Animation at Netflix, believes global production is here to stay, and companies that don’t lean in will be left behind.

How Weta Digital is keeping up with the insatiable demand for VFX and animation

The demand for compelling visuals is outstripping the pace at which creators can deliver to studios and streaming services. To ramp up capabilities, Weta Digital takes a two-pronged approach: empower more artists and harness virtually unlimited computing power.

Alongside a shift from on-premises resources to AWS, a move announced in late 2020, Weta is developing tools that boost creativity by cutting the time spent on work that can be automated.

For example, Weta Digital CEO Prem Akkaraju mentions the studio’s proprietary tree growth software that generates geographically accurate forests, which was used on the Planet of the Apes franchise.

If the digital trees are impressive, digital humans are the next logical step, from “Gollum” to the 23-year-old version of Will Smith featured in Gemini Man.

Akkaraju explains how studios can make this leap with Weta tools through WetaM, a cloud-based software-as-a-service offering recently announced in partnership with Autodesk.

How Amazon Nimble Studio puts artists first

The idea for Nimble Studio was sparked when Rex Grignon accessed his office computer from a training room. Once he realized that a powerful workstation doesn’t need to be located under your desk to do work, he started learning about the cloud.

As Director of Amazon Nimble Studio Go-to-Market at AWS, Grignon now works to ensure that Nimble Studio provides a frictionless yet customizable experience for all artists. That inclusive spirit has paved the way for AWS’ support of important industry organizations that promote open source and diversity, including the Academy Software Foundation (ASWF), the Blender Foundation, and Women in Animation, of which AWS recently became a corporate member.

In fact, AWS CG Supervisor Haley Kannall announced the exciting new partnership during the keynote, citing the importance of having women and members of other underrepresented communities in decision-making roles!

Why the future depends on making technology intuitive for all filmmakers

James Cameron and Robert Zemeckis have been pioneering virtual production for decades. But for game-changing innovation to happen now, the technology has to get into the hands of filmmakers who don’t have multi-million-dollar budgets.

Amazon Studios’ Ken Nakada, Virtual Production Supervisor for Prime Video, and Epic Games Industry Manager David Morin found significant common ground on where the technology is headed: virtual production needs to become more intuitive.

Why? Because significant progress happens only when a broader set of filmmakers, and every production department, can access it. And that progress is going to happen very, very soon.

Inside the keynote: technology in action

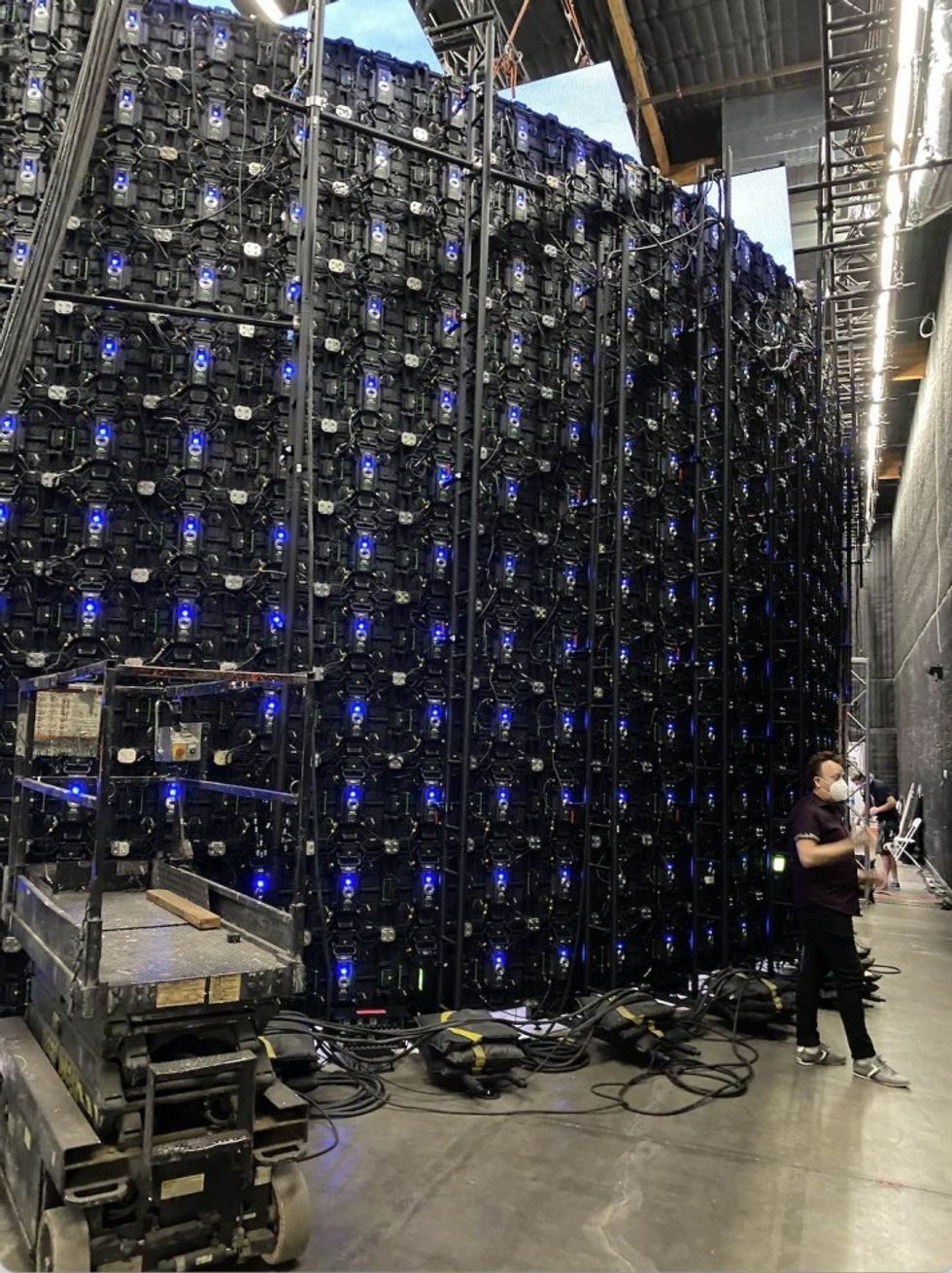

“Through the Looking Glass: the Next Reality for Content Production” profiles cutting-edge content innovation, and it’s shot that way too.

After host Iverson’s digital backdrop changes to reflect the advancements of each decade, he embarks on a stroll through a leafy park that is in fact recreated on a virtual production stage.

Captured on the XR Stage at 204 Line facility in Pacoima, CA, the presentation was made possible by ICVR and Brendan Bennet Productions.

Directed by Scott Kelley, with ETC Head of Adaptive and Virtual Production Erik Weaver as Executive Producer, it was filmed with a three-camera setup, an unconventional approach considering that most virtual productions use a single camera. Epic Games’ Unreal Engine handled real-time rendering, and ICVR created the dynamic digital park environment, leveraging a range of AWS services during production.

Using AWS, ICVR was able to iterate on the environment, with a remote team working across time zones to ensure the visuals closely matched the physical set, which included foliage, a bench, and dirt flooring.

The shoot’s three-camera setup included an ARRI Alexa Mini and two Sony Venice cameras. The stage displayed 270 degrees of imagery across more than 35 million pixels, using ROE Visual BP 2.8mm LED panels in a curved configuration for linear display and Planar Systems LED panels for ceiling imagery. LED video processing was handled by Brompton 4K Tessera LED processors.

Motion tracking was handled by Stype. The AWS services ICVR used to create the background imagery include Amazon Simple Storage Service (Amazon S3), Amazon Elastic Compute Cloud (Amazon EC2), and Amazon Route 53. ICVR also used the AWS Fargate serverless compute engine and Amazon Cognito to manage its proprietary RendezVu interactive 3D world app.

Want to see the keynote and more cutting-edge insight from industry insiders?

You can watch it for free via the SIGGRAPH website until Oct. 29, 2021. All you have to do is visit AWS and register for a complimentary code.