Want a Desktop Video Supercomputer? New NVIDIA & AMD GPUs May Make It a Reality

NVIDIA GeForce GTX Titan Z

Apparently featuring 2,880 cores and 6GB of VRAM per GPU (for a total of 5,760 processing cores and 12GB of VRAM across both GPUs), the Titan Z is, according to AnandTech, "NVIDIA's obligatory entry into the dual-GPU/single-card market, finally bringing NVIDIA’s flagship GK110 GPU into a dual-GPU desktop/workstation product." The card's core clock rate and power consumption haven't been announced yet, but AnandTech had this to say based on other available information:

The GPU clockspeed [should be] around 700MHz, nearly 200MHz below GTX Titan Black’s base clock (to say nothing of boost clocks). NVIDIA’s consumer arm has also released the memory clockspeed specifications, telling us that the card won’t be making any compromises there, operating at the same 7GHz memory clockspeed of the GTX Titan Black.

Tom's Hardware adds the following, drawn from the video below but restated here for emphasis:

[NVIDIA CEO Jen-Hsun] Huang compared the new Titan Z to Google Brain, which features 1,000 servers packing 2,000 CPUs (16,000 cores), 600kW and a hefty price tag of $5,000,000 USD. A solution using Titan Z would only need three GPU-accelerated servers with 12 Nvidia GPUs total.

The following video was featured on the NVIDIA blog.

I'm pretty sure we all hope each card is assembled like a Transformer, too. The Titan Z's launch price is slated at $3000. Pricing for the GPU AMD teased last week, on the other hand, has not yet been announced. Speaking of which...

AMD FirePro W9100

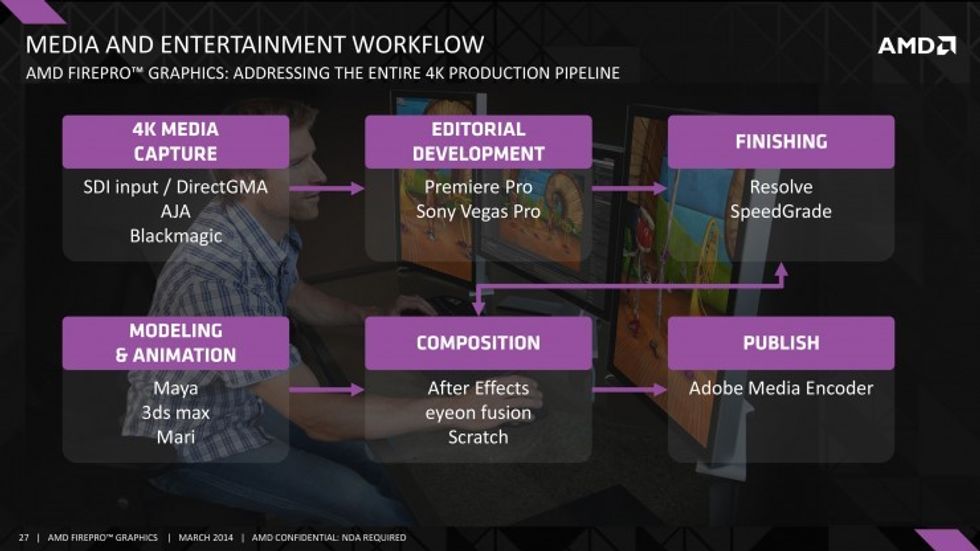

Though not a dual-GPU card, the FirePro W9100 isn't playing around either. It features an impressive 16GB of VRAM and 2,816 processing cores, and AMD's announcement specifically targets high-end production (among other things) as a field that stands to benefit from the card. Once again, AnandTech has the following to say: "AMD is banking on their 16GB of VRAM giving them a performance advantage [in 4K video encoding and image processing] due to the larger working sets such a large memory configuration can hold." Case in point:

A Faster & More Optimized Future

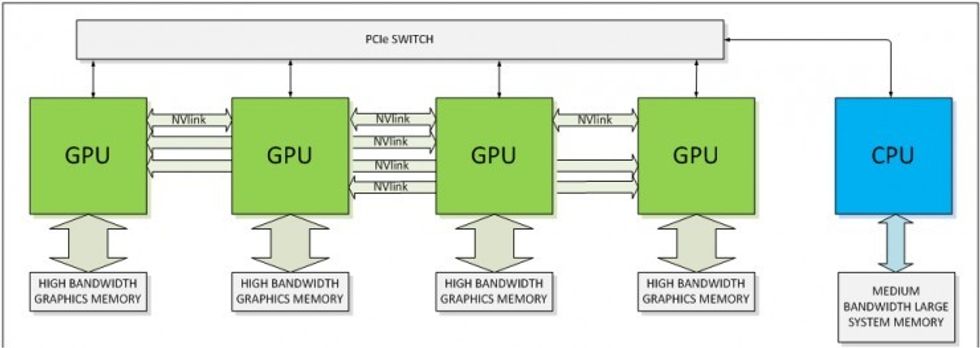

In yet another interesting announcement in the graphics world last week, NVIDIA outlined a revised product roadmap for its GPU lines. The architecture the company plans to unleash in 2016, affectionately dubbed Pascal, will include several previously announced features, but the one not unveiled until now is perhaps the most interesting: Pascal will connect via a totally new interface called NVLink. According to AnandTech, NVIDIA "is looking at what it would take to allow compute workloads to better scale across multiple GPUs." Apparently the 16GB/s of a PCIe 3.0 x16 slot (at 8 gigatransfers per second, or 8GT/s, per lane), or even the 16GT/s proposed for the upcoming PCIe 4.0, isn't and won't be enough for Pascal GPUs to communicate with one another optimally.
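The 16GB/s figure can be reconstructed from the per-lane transfer rate. The sketch below is a back-of-envelope estimate, not a spec calculation: it assumes a 16-lane (x16) slot and the 128b/130b line encoding that PCIe 3.0 uses (and PCIe 4.0 keeps), and it ignores packet and protocol overhead.

```python
# Back-of-envelope bandwidth for one direction of a PCIe x16 link.
# Assumptions (not from the article): a 16-lane slot, 128b/130b line
# encoding, and no packet/protocol overhead beyond that encoding.

LANES = 16
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b: 128 payload bits per 130 sent


def pcie_gbytes_per_s(gt_per_s: float, lanes: int = LANES) -> float:
    """Approximate usable GB/s in one direction for a PCIe link."""
    # One bit per transfer per lane, scaled down by the encoding overhead.
    payload_gbits = gt_per_s * lanes * ENCODING_EFFICIENCY
    return payload_gbits / 8  # 8 bits per byte


for name, rate in [("PCIe 3.0", 8.0), ("PCIe 4.0 (proposed)", 16.0)]:
    print(f"{name} x16: ~{pcie_gbytes_per_s(rate):.2f} GB/s")
```

Rounded, 8GT/s across 16 lanes works out to the roughly 16GB/s quoted above, and doubling the transfer rate to 16GT/s roughly doubles it to about 31.5GB/s.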

Considering that each card can access its memory at rates that may exceed 250GB/s, it's not terribly difficult to see why NVIDIA would want a wider pipe. In terms of bandwidth, NVLink will feature 8 lanes shoveling 20Gb/s apiece, for 160Gb/s, or 20GB/s, overall (according to AnandTech, that's a signaling rate of 20GT/s per lane). NVIDIA won't be abandoning PCIe by any means: some proposed implementations envision multiple GPUs interfacing with each other via NVLink but with the CPU via PCIe, while others see one or several GPUs talking to the CPU directly via NVLink. One version of the former is plotted out below:
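To put those bandwidth numbers side by side, here is a rough sketch that uses only the figures quoted in this article: 8 NVLink lanes at 20Gb/s, about 16GB/s for PCIe 3.0 x16, and roughly 250GB/s of local memory bandwidth per card. It treats the lane rate as raw bandwidth and ignores encoding and protocol overhead, which isn't covered here, so read the results as approximations.

```python
# Compare the quoted NVLink figure with PCIe 3.0 and on-card memory bandwidth.
# All inputs are the article's rounded numbers; encoding/protocol overhead
# is ignored because it isn't specified there.

NVLINK_LANES = 8
NVLINK_GBITS_PER_LANE = 20.0   # Gb/s per lane, per AnandTech
PCIE3_X16_GBYTES = 16.0        # rounded PCIe 3.0 figure from the article
LOCAL_MEMORY_GBYTES = 250.0    # "may exceed 250GB/sec" per card


def nvlink_gbytes_per_s(lanes: int = NVLINK_LANES,
                        gbits_per_lane: float = NVLINK_GBITS_PER_LANE) -> float:
    """Raw NVLink bandwidth in GB/s: total gigabits divided by 8 bits per byte."""
    return lanes * gbits_per_lane / 8


nvlink = nvlink_gbytes_per_s()
print(f"NVLink:                 {nvlink:.0f} GB/s")
print(f"NVLink / PCIe 3.0 x16:  {nvlink / PCIE3_X16_GBYTES:.2f}x")
print(f"Local memory / NVLink:  {LOCAL_MEMORY_GBYTES / nvlink:.1f}x")
```

By these numbers, NVLink as described would offer about 1.25 times the bandwidth of a PCIe 3.0 x16 slot, yet still sit more than an order of magnitude below a card's local memory bandwidth.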

Back to the Titan Z and FirePro W9100: it's unclear how these two workstation graphics units will stack up against each other, in terms of both performance and price. Neither card's clock rate is presently available, and the AMD unit's cost is also a question mark. For a rough idea, the FirePro W9100's predecessor, the W9000, is going for $3400 on Newegg right now. These are pieces of hardware clearly not geared toward the casual user, and $3K is no amount to be brushed off. Not to mention, we're living in an age when a filmmaker could buy a camera system with a global shutter, interchangeable lenses, and a 12-stop Super35 sensor that shoots 4K directly to post-ready codecs for the same price. Well, at least one such system.

With that said, this is also a file-based, data-centric age of filmmaking in which computers are ubiquitous and essential to basically every stage of production. The justification for spending $3000 on a single piece of computing hardware isn't so different from that for spending the same amount on a solidly spec'd camera as part of a gear kit. If it enables, and better yet facilitates, the creation or manipulation of creative media (and hopefully pays for itself in the process), it may be worth it for you. And if all that wild business about gaming on 4K displays at maxed-out settings and frame rates is a necessity for you, one of these cards may be just as valuable.

For all the technical details, check the links below. Many thanks to AnandTech for the information and images.

Links:

- NVIDIA Announces GeForce GTX Titan Z: Dual-GPU GK110 For $3000 -- AnandTech

- AMD Announces FirePro W9100 -- AnandTech

- NVIDIA Updates GPU Roadmap; Unveils Pascal Architecture For 2016 -- AnandTech

'Back Home' via Mercedes Arutro