'The Truth About Killer Robots': How to Build a Sci-Fi Documentary

Maxim Pozdorovkin's HBO film asks, "When a robot kills a human, who is to blame?"

In 1942, writer and biochemistry professor Isaac Asimov theorized that in a future that saw the emergence of artificial intelligence, humans would need to agree upon some ethical standards for robots. He developed the Three Laws of Robotics:

- A robot shall not harm a human nor allow a human to become harmed through inaction.

- A robot shall obey a human so long as the orders do not interfere with the First Law.

- A robot shall protect itself so long as it does not interfere with the two previous laws.

As Maxim Pozdorovkin's documentary The Truth About Killer Robots demonstrates, these laws have already been broken, and more problems have been created in their wake. We've advanced technologically, but we're not the wiser for it. Or, as Asimov wrote, "Science gathers knowledge faster than society gathers wisdom."

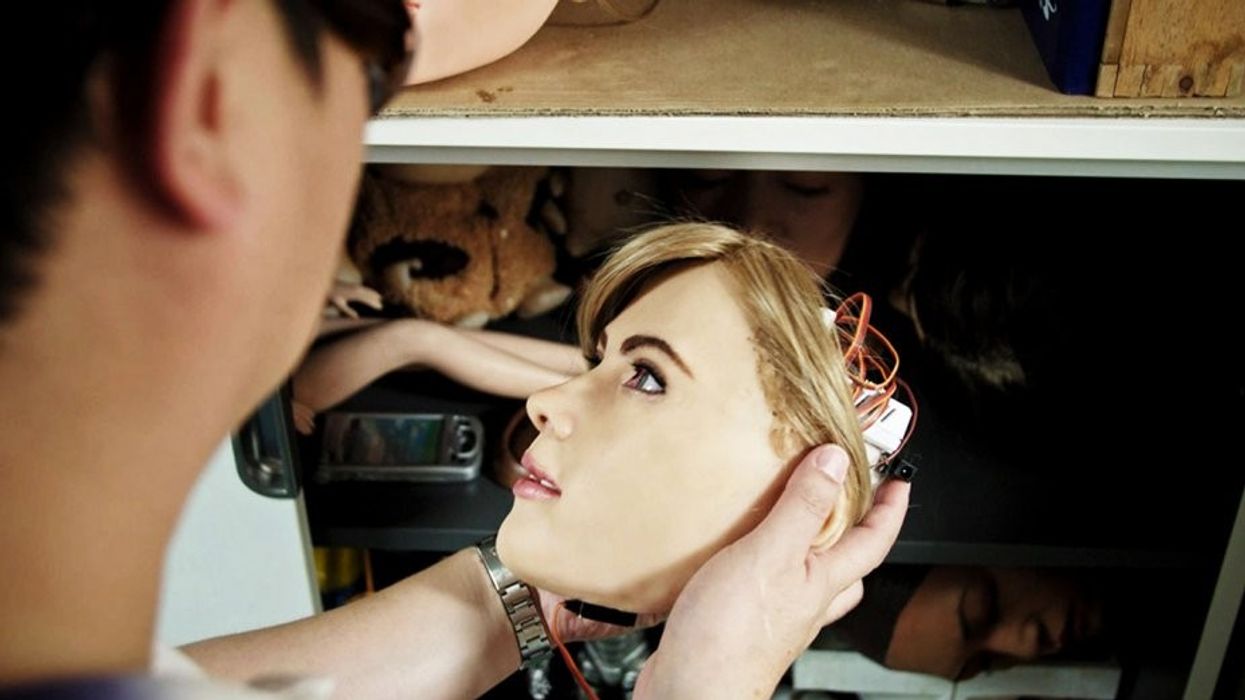

The film, which is now streaming on HBO, begins with the story of a German worker in a Volkswagen plant who was pinned against a wall and crushed to death by a robotic arm. We hear about it from a robot herself: our narrator, called Kodomoroid. With her automaton voice as a guide, we'll travel to the world's largest economies to discover how automation is replacing workers at an astonishing pace, and how the dangers artificial intelligence poses are much more nuanced and complex than simply causing human death.

No Film School caught up with Pozdorovkin to discuss the ethical quandaries he raises in his film, how he engineered his film to play like a sci-fi genre film, and more.

No Film School: What fascinates you about robots and automation?

Maxim Pozdorovkin: For a long time I've been fascinated by the idea of automation and how you make a film about it. I wanted to make a movie about structural labor economics, which is the most boring subject imaginable for a film. So I tried to see how you could do that in an interesting way.

Almost everything that I saw and read about AI and robots had a lot of interesting points but was unsatisfying. I realized that the big blind spot was that almost all of it was premised on this marketing idea of what robots can do for us. I realized that what I was interested in is what robots could do to us—the way they transform us.

Every single time there's some kind of accident [with AI], we talk about this thing in the future that might harm us. We're not thinking about the way that the processes that underlie it are harming us already and broadly transforming society. When an accident happened in Germany at the Volkswagen plant where a robotic arm crushed a worker, I was looking at all the news responses, and everyone jumped to the science fiction trope of the Terminator. But the word "automation" wasn't even mentioned. I went to Germany and met with a lot of the factory workers, and it was fascinating because they were forbidden from discussing the accident, but they were all very glad to talk about the way that their work environment has been transformed over the last 30 years.

I thought that there was this way of making a film that looks at automation as a cause of death—a kind of metaphorical death, the dehumanizing process. I wanted to think about AI not as this distant thing, but as a continuation of the automation process that we've been on for a long time—to see it as a historical continuum.

Pozdorovkin: Asimov's laws are these fascinating shorthands that people don't actually read into. He starts by predicting a future, which I think is 2052, where we will have laws to regulate the legal complications that arise any time one of these [robot] accidents comes up. I thought he was very prescient. But if you actually read through the stories, all of them are essentially examples of how those laws will inevitably fail in any society. In other words, the stories themselves exemplify all these complications and problematic gray areas that will inevitably arise even if the laws are there and are applied.

So for me, his laws were a really big way of trying to organize the film around this trope of science fiction, but make it into something like a panorama of the labor market, as well.

"I try to make films about relevant subjects, but think about them through film genres."

NFS: Aesthetically and tonally, you did approach this from a sci-fi, futuristic angle. How did you figure out that you wanted, for example, a robot to be the narrator, and all the other touches that came together to make the film feel like a dystopian sci-fi?

Pozdorovkin: I find that one of the main shackles of documentary film is a certain kind of obsessive literalness and earnestness. That shackles it to journalism.

I try to make films about relevant subjects but think about them through film genres. Speaking about these subjects through film genres and techniques allows you to feel and see the subject in new ways that you might not have otherwise. So I'm always looking for cinematic genres as a way of getting at these other more qualitative, feelings-based subjects.

For this film, there's a certain uncanniness and discomfort that we feel when we're confronted with this dehumanizing process of automation. A lot of that is overlooked when people just report on the job numbers. For example, de-skilling [is resulting in] the loss of significant attributes, like memory and spatial orientation, that we've developed over millennia. But how do you actually represent that and not just explain it to people?

Specifically, in terms of the [robot] narrator, that was also a response to a lot of the stuff that I saw in the handling of AI and robots as a subject in film. There are so many films about robots and AI that are premised on these ideas, like, "Can a robot direct a movie? Can a robot be a DP? Can an AI edit a movie?" They fail because these are really high-order cognitive tasks. That creates a certain false optimism of the [human] uniqueness of artists. I think that artists really do that to a great extent, but if you look at actual economic data, [automation] is happening to all these other industries: photography, music, film, journalism. It's happening everywhere.

I wanted to grapple with that basic fact, and one way of doing it is to use an android that was created to narrate the film. Also, it's cheaper than using an actor. You have much more flexibility. You don't have to pay for a studio. All those are typical reasons for automation.

Also, the film is a cautionary tale about thinking about what humanism really is, and the worst thing I could have done would have been to hire a James Earl Jones soundalike to add human gravitas.

If you think about the process sci-fi writers go through, they look at the world around them and they think about certain objects or behaviors that they can tell will be ubiquitous in the future. Then they build a world out of that. An automated host is definitely something that will be common. It's something that we're gonna have to get used to. Just, I think, a week ago in China, there was an AI news anchor that was officially launched.

NFS: I want to go back a bit to the way that you focused on labor from a global perspective. When you begin the Tesla segment, you almost expect to hear from Elon Musk and the CEOs that are sort of perpetrating this change. You don't expect to hear from wage workers.

Pozdorovkin: It's like a true crime Trojan horse, with another idea inside.

From the beginning, that was the thing that I found so baffling. All of the discussions of the subject were the voices of the CEOs and engineers and professors: people who were the direct beneficiaries of the technology. My favorite example, which we have in the film, is this robot convention in Japan, in Tokyo, where they have android hostesses. There are all these real-life female stewards that are doing that same job and are very clearly under stress by these freakazoid androids. I saw more than 20 reports about this place, and not a single one of them managed to ask any of the women there what they thought about this.

Those kinds of blind spots are incredibly revealing in the way that we think about this issue. So I wanted [the film] to be a panorama of the labor market where, at each stage, you see the different aspects of it and the way that it transforms people's lives. [Automation and AI] is not a thing that you can say is good or bad, but it's something that we just need to grapple with. And the best way to grapple with it is to create a panorama of voices, a polyphony that kind of can speak to the way that the labor market in its entirety is changing. This was the reason that the film is set in the four biggest economies in the world.

NFS: Can you talk a bit about the production aspect of the film? How long did it take to get this off the ground?

Pozdorovkin: This was really a wonderful experience. HBO liked the development teaser I made. I knew I wanted to do a strange conceptual genre film, and they were remarkably nice and hands-off for, I think, three and a half years. It wasn't like a vérité film where you have a clear story that you're just embedded in and living. I was figuring it out as I went along. The fact that they went with that is to their credit, I think.

NFS: What was the biggest challenge that you encountered throughout the production process?

Pozdorovkin: When you're making a high-concept movie, such as this one, it's very hard to explain to people. It's about automation at the literal depth, but then it's really about dehumanizing mechanization at the metaphorical depth. This is very different from a plot-driven or a character-driven film. You need a central, unifying idea that drives the film. I think the edit is the hardest part of this kind of film.

NFS: Did you edit it yourself or did you work with an editor?

Pozdorovkin: I edited it myself, together with an editor, Isabel Ponte, in our production company. I also did the music with Matvey Kulakov. We needed to have a vibe and we were temp-ing with a lot of lo-fi electronics, and we just started writing the music alongside the film, and then ended up liking it.

Pozdorovkin: Yeah. Robot technological advancement will always be faster than the pace at which laws change. Given the current political situation, that will be doubly true. A lot of the cases in the film bring out these problematic aspects of robot presence in our lives in terms of culpability. There's this absolute dissolution of culpability across hundreds of companies and individuals. That is deeply problematic when you're talking about the military, especially.

NFS: The film raises other ethical questions about the future of AI. I'd never thought about this angle that the philosopher in the film brings up about AI's threat to human empathy: the moment we start fabricating or trying to recreate empathy, it loses its essence, or value, in humans.

Pozdorovkin: Yeah. That's a really important point, and I was glad that we were able to get it in the film. Philosophers sometimes call this imaginative understanding—it's the capacity that we have for understanding what it's like to be another person. There are very few tools that we actually have for seeing a person's world and knowing what they're going through. It is tone of voice, visual cues...the kinds of psychological cues that we receive from other people.

When you receive a robotic call and for that split second it catches you and you respond in a human way, you might say, "Oh, hey, how you doing?" Then you correct yourself, and you realize it's a robot. Every single time you do that, a human connection is undercut. Once we're constantly undercutting our faith in those impulses, it's deeply, deeply problematic because it's the social fabric. When you don't trust the tools that you need to make connection possible, it's a deeply, deeply troubling thing.

Another thought experiment like this: Say you're driving on the highway, and you're switching lanes. Now, you look in your side view mirror and you see someone going really fast. When it's a human, you essentially project fallibility on them. Maybe they're having a bad day, maybe they're crazy, whatever. You don't cut them off because of this potential fallibility. You don't want to ruin your day, you don't want to get into an accident.

But when you're surrounded by machines that are programmed to never run into you, you will behave like the biggest asshole towards those autonomous vehicles. Maybe initially there will be a period where you're cautious, but eventually, you will just cut them off. You will be completely inconsiderate towards them. But guess what? That transition period where it's both autonomous and human behind the wheel will be sort of lengthy. Not everyone's going to give up driving at the same time. What will happen is, inevitably, there will be a secondary feedback loop where you will start behaving worse towards other humans.

A lot of feminist philosophers have smart ethical objections to sex robots for the same reason. Inevitably, when you are interacting with a female android that's so lifelike, there will be a feedback loop in the way that you treat real women.

NFS: It's absolutely terrifying, even on a base level, when you start to deconstruct the human experience as a bunch of elements that can be replicated. You detach it from the subjectivity of what that feels like to have that human experience. I'm sure that changes person-to-person interactions.

Pozdorovkin: Yeah. I think a big problem is that we dumb down our own idea of what intelligence is. The second section of the film starts with the statement that the automation of the service sector requires your trust and cooperation, and that's a sentiment that obviously applies to Joshua Brown [the victim of the autonomous Tesla accident]. He became so dependent on his autonomous car that it cost him his life.