How to Make High-Quality Animated Sci-Fi in Realtime with Unreal Engine on a Budget

Think virtual production is the preserve of James Cameron? Find out how filmmaker Hasraf "HaZ" Dulull creates cinema-quality animated sci-fi in realtime using Unreal Engine, under lockdown, with a 3-person crew (plus voice actors and sound composer).

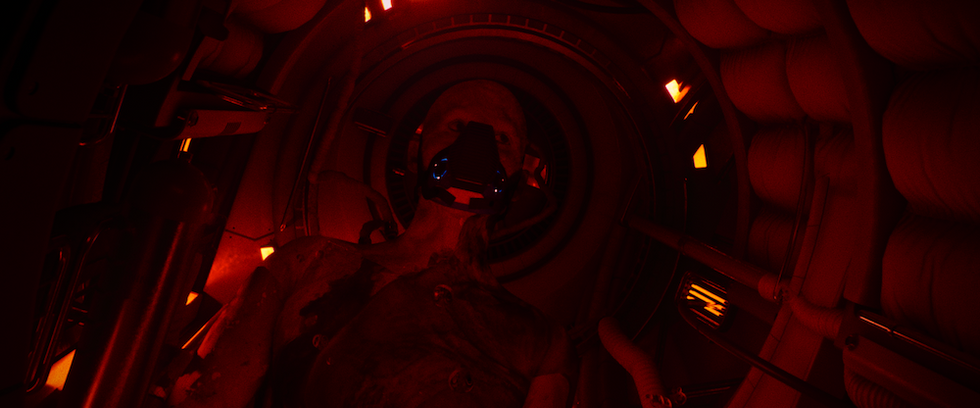

Having made the sci-fi features 2036 Origin Unknown and The Beyond (both were on Netflix), as well as the action-comedy show Fast Layne (now available on Disney+), the UK-based director/producer Hasraf "HaZ" Dulull is establishing quite a profile. Neil Gibson, graphic novelist and owner of TPub Comics, reached out to him online looking for a director to create a proof of concept based on one of the publisher’s IPs.

Dulull, who grew up watching anime, was asked by Gibson to read his graphic novel, The Theory, and pick a story in the anthology. HaZ was instantly attracted to Battlesuit.

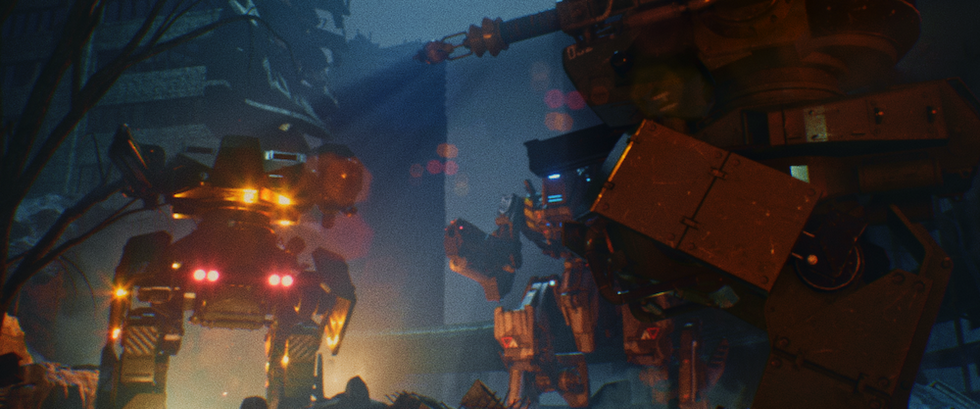

“I love big mecha robots,” Dulull says. “I’ve always wanted to make a film with big robots and I love a challenge.”

The catch: TPub imagined it as live-action… inside of 4 months to premiere at Comic-Con in London.

“Even if I’d worked 24 hours a day, I knew that on the small budget they had it just wouldn’t look that good. It was important to me and the audience, and also to TPub, that we give it the stamp of the highest quality we could to tell this story.”

Dulull suggested producing the story as an animation along the lines of Netflix’s Love, Death & Robots.

“They were very hesitant at first—thought it sounded very expensive, requiring a studio and a large team to do it well,” he says.

To convince them, Dulull made a mock-up in Unreal Engine.

“At the time [late 2019], I was prepping for my next sci-fi live-action feature, Lunar, which was supposed to go into pre-production in May 2020 [postponed due to COVID-19] and I’d begun to previz key sequences using Unreal Engine. I remember thinking to myself that the quality of the output from Unreal was good. It’s free to use and it’s real-time. I thought that with a bit more graphics power and love you could make actual narrative content this way.”

The online reaction to the posted test was positive and gave TPub the confidence to say, “Wow! If you can do 12 minutes of that, we will leave you to it!”

The short test sequence was made using the Paragon assets, which are free to download and use from Epic’s Marketplace.

Setting the benchmark

The test showed Dulull that he could pull it off, and it set expectations for TPub too. “We decided to take a realtime approach mainly because of the low budget and tight schedule we had for this ambitious project,” Dulull says. “We weren’t going to be making something that looks like Pixar but the benchmark was still high. Audiences don’t really care how much budget you have; it’s the end result that matters.”

“The great thing about realtime CGI rendering is what you see is what you get. I was able to show how it would look regardless of budget.”

Production began during Christmas 2019 with a shoestring crew and an agile way of working. Joining Dulull were Unreal Engine technical director Ronan Eytan and artist Andrea Tedechi.

The storyline for Battlesuit followed the graphic novel, although the script was continually being refined by Gibson at TPub during the adaptation for the screen. Indeed, this was the publisher’s first foray into television production and animation.

“One panel of a comic book tells you so much, but to get that coverage in animation could require maybe 6 shots,” Dulull says. “That’s a different mindset for graphic novel writers to adapt to, and Gibson was great to work with in making that transition from graphic novel to screen.”

It wasn’t as if Dulull was an expert at character animation either. True, he’d spent the early part of his career working in video games and then visual effects for movies like The Dark Knight. He was even nominated for several VES awards for his work as a Visual Effects Supervisor before becoming a director.

“I’ll be the first to admit I’m no character animator, which is why our core team and the approaches we each took were so important. We each had different skills.”

But there were other workarounds too, the likes of which Dulull believes could benefit other indie filmmakers.

Asset building

Rather than creating everything from scratch, they took a "kitbash" approach to building some of the key assets, by licensing 3D kits and pre-existing models (from Kitbash3D, Turbosquid and Unreal’s Marketplace). Tedechi used these as the base to build and design principal assets such as the Mech robots and the warzone environment. Dulull was able to animate the assets and FX in realtime within Unreal’s sequencer tool and begin assembling sequences.

Animating the lead character was more of a challenge. They didn’t have the budget for a full motion capture session with actors, so they bought “tonnes” of mocap data from Frame Ion Animation, Filmstorm, and Mocap Online and retargeted that data onto the bodies of the main characters.

But they still needed to find a way of getting original performance on the face of the character.

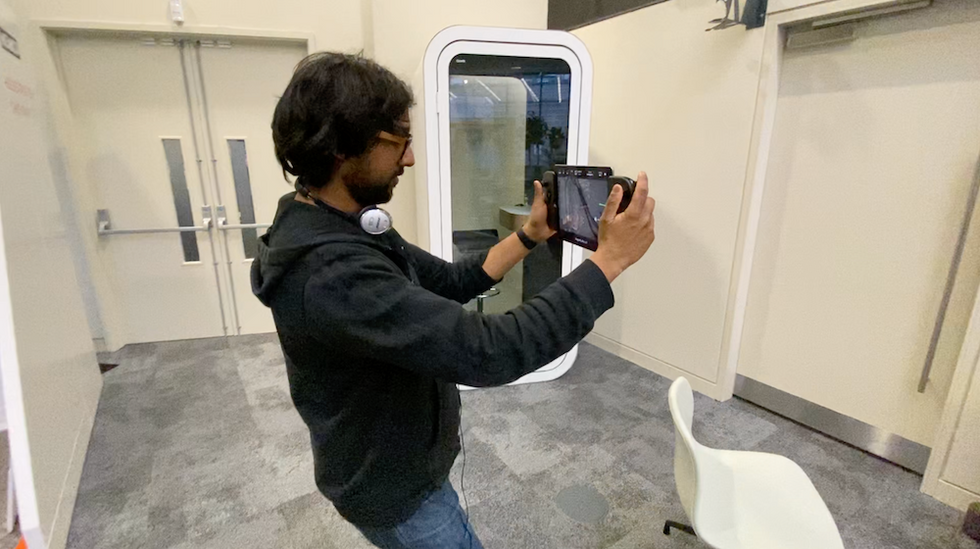

“Ronan had this genius idea of using the depth camera inside an iPad (used for facial recognition) to capture the movement of the face and eyeballs of our actor. He also developed a pipeline utilizing Live Link in Unreal.”

Actress Kosha Engler, known for her voice work in video games such as Star Wars: Battlefront and Terminator: Resistance, was also the character’s voice artist. The team was able to record her voice and capture her facial performance at the same time in one session.

“We had to do some tweaks on the facial capture data afterward to bring some of the subtle nuances it was missing, but this is a much quicker way to create an animated face performance than trying to do it all from scratch or spend a fortune on high-end facial capture systems.”

Workflow under lockdown

By the time COVID-19 lockdown measures were announced across the UK in mid-March, they were already working in a remote studio workflow.

“The nature of the production and the tools we used allowed us to work from anywhere, including our home living rooms,” he explains.

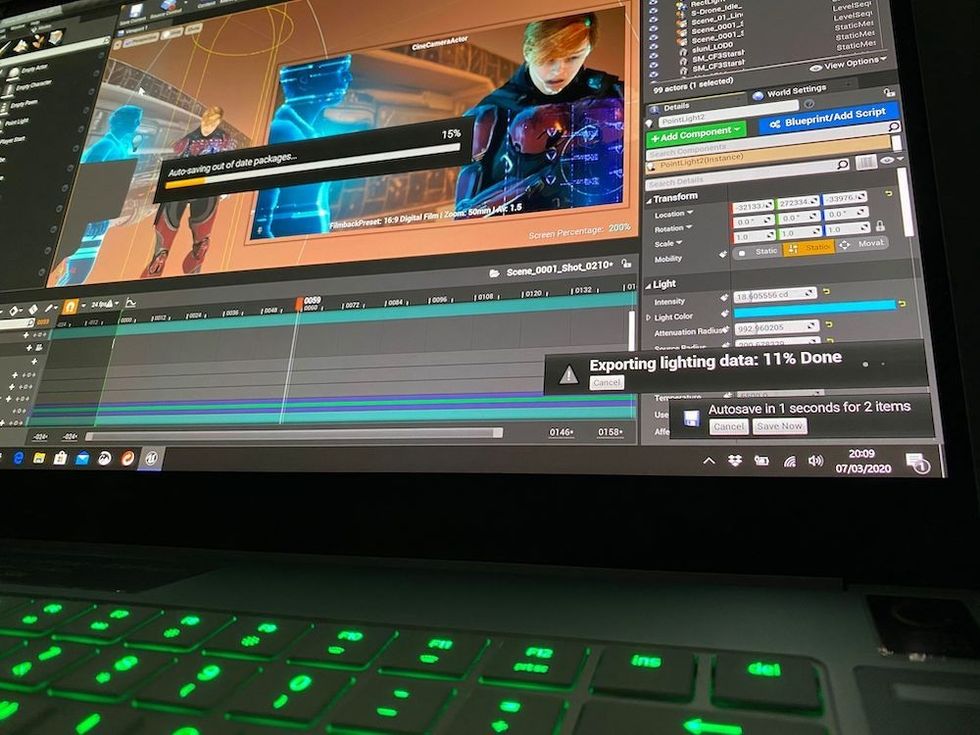

While Tedechi concentrated on building environments and robot assets and Eytan was busy with the character animation and pipeline side of things in Unreal, Dulull was exploring camera moves and creating each shot.

“My style as a director is to be hands-on and this project suited this perfectly. I would ask Andrea for specific assets, take those and build out all the shots, light them and animate before putting them into an edit (DaVinci Resolve 16), while Ronan would rig the characters and troubleshoot any technical issues.”

They used a 1TB Dropbox account as a backup store and shared repository, and communicated via Skype screen sharing and WhatsApp. To keep production tasks and schedules on track, they used Trello, an online management system, to coordinate the collaborative workflow and assign tasks.

“It’s far better than using email to communicate; tracking long email threads is a nightmare. Plus, Trello is free for a certain number of users. It’s like a big index card system (which also has Dropbox integration), so we could all make notes and move the cards around informing us who was using certain things. We also used Vimeo Pro to upload and share the latest versions with timecode annotations. For instance, Andrea could see how I’d used an asset and then work on refining that certain angle.”

Virtual camera work

A large part of the film takes place in a war zone, for which Dulull wanted a visceral, raw, handheld action vibe to the camera work.

“I soon realized that trying to keyframe that level of animation would have been too slow and unproductive.”

He turned to DragonFly, a virtual camera plugin for Unreal (also available for Unity 3D and Autodesk Maya) built by Glassbox Technologies with input from Hollywood previz giants The Third Floor.

With the plugin, its companion iPad app, and Gamevice controllers, Dulull had a lightweight virtual camera in his hands.

“It was such a tight schedule that learning a new toolset was really tricky, but I wanted to use the camera for elements in the virtual cinematography I knew I couldn’t animate.”

After testing it at Epic’s lab in London, Dulull was able to use this in his own front room.

“I felt like James Cameron making Avatar. I treated it like a real-world camera with real-world lens decisions. I was able to review takes and shots instantly. As a filmmaker, this gave me the flexibility, speed, and power to design and test shoot ideas quickly and easily. Virtual production is a gamechanger. Now indie filmmakers can use the same tools used by the big film studios and you can do it in your own home.”

With each scene containing a huge number of assets, the team had to be smart about reducing the polygon count. “We set some rules. All big robots were in high-res as we would see them in closeups a lot, but we dialed the level of detail down on the buildings or backgrounds, especially when the camera was moving around fast. The great thing is you can control all that level of detail within Unreal.”
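The rules Dulull describes can be pictured as a small decision function. The sketch below is only an illustration of that idea in plain Python, not the team's pipeline and not Unreal's API: the `Asset` class, the `pick_lod` function, and every threshold value are hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    """Hypothetical scene asset used only for this illustration."""
    name: str
    is_hero: bool      # e.g. the big robots that appear in close-up
    distance_m: float  # distance from the camera, in metres


def pick_lod(asset: Asset, camera_speed_mps: float,
             near_m: float = 20.0, far_m: float = 100.0,
             fast_camera_mps: float = 10.0, max_lod: int = 3) -> int:
    """Return a level-of-detail index: 0 is full detail, higher is coarser.

    All thresholds are assumed values, not figures from the production.
    """
    # Rule 1: hero assets always stay at full resolution.
    if asset.is_hero:
        return 0

    # Rule 2: background assets lose detail as they get further away.
    if asset.distance_m < near_m:
        lod = 0
    elif asset.distance_m < far_m:
        lod = 1
    else:
        lod = 2

    # Rule 3: drop one more level when the camera is moving fast.
    if camera_speed_mps >= fast_camera_mps:
        lod = min(lod + 1, max_lod)
    return lod
```

In Unreal itself, level of detail is configured per mesh inside the engine rather than with a script like this; the function only makes the trade-off explicit: detail is spent where the audience will look, and saved where distance and motion hide it.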

All the shots were captured at 2K resolution in ProRes 4444 QuickTime format, and the desert scenes were rendered as EXRs. They were then taken into DaVinci Resolve for editing, color grading, and deliverables.

Workstation power

Powering it all, Dulull used the latest Razer Blade 15 Studio Edition laptop PC, which comes with an Nvidia Quadro RTX 5000 card on board.

“The Razer Blade was constantly being pushed, with most of the scenes being very demanding with the amount of geometry, FX, and lighting, including scenes that were 700 frames long,” he says. “I was able to pump out raytracing in realtime.”

Every single shot in the film is straight out of Unreal Engine. There’s no compositing or external post apart from a few text overlays and color correction done during editing in Blackmagic Design’s DaVinci Resolve. That made good use of Nvidia’s GPU and the Razer’s 4K display, allowing him to make creative tweaks to each shot’s lighting and color right through to deliverables.

The score was also written in synchronicity with production, as opposed to being made weeks afterward in post. Dulull turned to noted composer Edward Patrick White (Gears Tactics) to write the music whilst production of the animation was happening.

“A lot of Edward’s musical ideas influenced my direction of the scene,” Dulull says. “That’s another unusual and creative way to work.”

Review and approval

He sent versions of the edit, which would have mostly first pass animation and lighting, to the publisher for their notes.

“Their notes were typically about story and dialogue, things that if we were doing this in a more conventional linear route, would entail time and expense to fix. But because we were in a realtime environment and we didn’t have pipeline steps, such as compositing or multipass rendering as you would get in conventional CG, we were able to make iterative changes really quick straight out of the engine.

“The ability to make these changes on the fly without making a difference to our budget and schedule is another huge game-changer. It could mean that many more story ideas get made because the risk to the producers and finance execs is so much smaller.”

Indie filmmaking goes virtual

With the cancelation of Comic-Con, TPub decided to release the short online exclusively with Razer. There is a TV series in development and it’s hoped Battlesuit can showcase not only TPub’s IP but also what is possible with this way of creating animated narrative content.

“Tools like Unreal Engine, DragonFly, Razer Blade, and Nvidia GPUs reflect the exciting revolution of realtime filmmaking that we are all currently venturing into, where indie filmmakers with small teams can realize their ideas and cinematic dreams without the need for huge studio space or large teams to set up and operate,” Dulull enthuses.

“If someone had said I could pull off a project like this a few years ago that is of cinematic quality, all done in realtime, and powered on a laptop, I’d think they were crazy and over-ambitious. But today I can make an animated film in a mobile production environment without the need for huge desktop machines and expensive rendering.

“I can see a future where realtime filmmaking is going to unleash a wave of new and fresh stories that not only feel big but are full of imagination and ideas that were previously deemed too risky and expensive to do.”