How to Sync Audio for Modern Video Production Workflows

Syncing audio isn't as difficult as you may think.

In most modern video production workflows, audio and video are recorded separately, allowing for higher-quality audio and more flexibility in production and post. Whenever they are recorded separately, they have to be synced in post-production, and that starts with a production workflow that gives the editor what they need to do so.

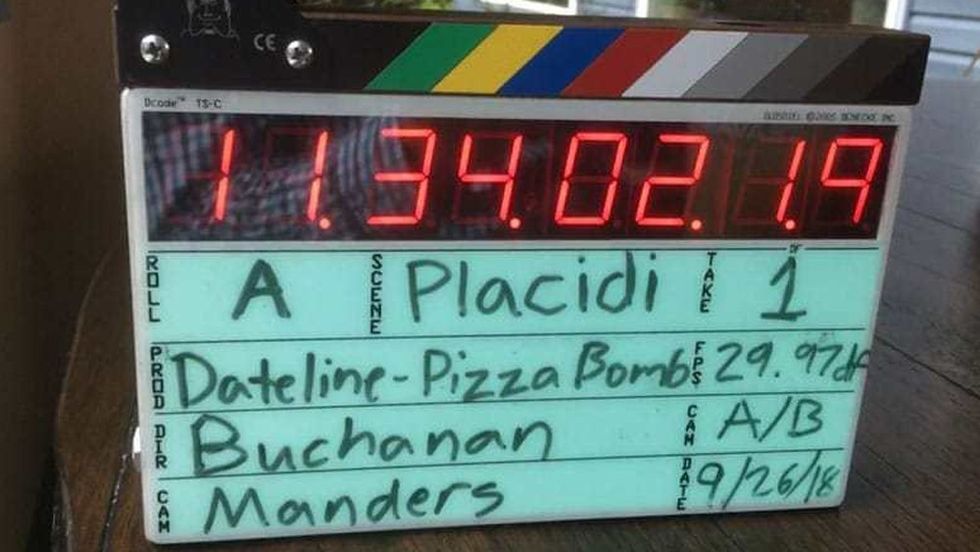

There are several ways to ensure everything can be synced in post: using a slate as a common sync point for both audio and video, using timecode metadata, matching audio waveforms, converting timecode recorded as audio, or visually burning in timecode via a timecode smart slate or timecode display.

Timecode is the preferred method of aligning audio and video in professional workflows, as it is much less labor-intensive than other methods and can save time and money in post-production. As the old saying goes, “One is None, and Two is One.” Redundancy is always the best practice.

When workflow and budget allow, it’s best to have timecode and genlock sync boxes on every camera, timecode and wordclock on every audio recorder, a timecode smart slate providing two backup forms of alignment points, and an audio feed to the camera as a reference for the editor.

Ensuring Accurate Long Term Sync in Production

A common misconception is that timecode and sync are the same thing. They are not. Timecode is only a metadata identifier.

Consider this common scenario that editors deal with regularly. On a production such as a comedy special, concert, or reality TV show, you have multiple cameras and separate sound recording gear that record without cutting for long periods. You align all your audio and video files at their start points in your NLE, and playback starts in sync, but the files slowly lose synchronization, and by the end of the timeline they have drifted apart in one direction or the other.

Even with timecode hardwired and matching starting points, how can they be noticeably out of sync by the end?

That’s because timecode doesn’t lock a camera’s or audio recorder’s clock to any other device. It acts as a metadata reference point for how to stamp the first frame when you start recording. Once you hit "record" on any device, it relies on its own internal clock to govern frame rates and define what time is. Each device’s clock runs at a slightly different rate, and over time these differences add up and show up as drift.

It might not be noticed on relatively short takes (like in the narrative world), but since it’s a cumulative effect, any take that lasts 30-40 minutes or longer could start to noticeably lose sync. To prevent this scenario from occurring, every clock on set needs to be slaved to one central master clock source generating Timecode, Genlock, and Wordclock for everything.
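To get a feel for how quickly small clock differences accumulate, here is a minimal sketch. The 20 ppm mismatch is a hypothetical figure chosen for illustration, not a measured value for any particular camera or recorder, and `drift_ms` and `drift_frames` are illustrative names.

```python
def drift_ms(duration_minutes: float, ppm_difference: float) -> float:
    """Offset in milliseconds between two free-running clocks.

    ppm_difference is the rate mismatch in parts per million
    (a hypothetical input; check the specifications of your own gear).
    """
    duration_ms = duration_minutes * 60 * 1000
    return duration_ms * ppm_difference / 1_000_000


def drift_frames(duration_minutes: float, ppm_difference: float, fps: float) -> float:
    """The same offset expressed in video frames at the given frame rate."""
    return drift_ms(duration_minutes, ppm_difference) / 1000 * fps


if __name__ == "__main__":
    for minutes in (5, 40, 120):
        ms = drift_ms(minutes, ppm_difference=20)
        frames = drift_frames(minutes, ppm_difference=20, fps=23.976)
        print(f"{minutes:>3} min: {ms:6.1f} ms ({frames:.2f} frames)")
```

Under that assumption, a 5-minute take ends only about 6 ms apart, while a 40-minute take ends about 48 ms apart, a little over one frame at 23.976 fps.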

Since cameras don’t need clocks as precise for counting frames as audio recorders need for recording audio, the camera clock is often where manufacturers cut corners on accuracy. Lower-precision clocks can also drift further under environmental conditions such as temperature differences, especially in extreme conditions.

What is Timecode?

Timecode is a clock data protocol developed by the Society of Motion Picture and Television Engineers (SMPTE). It is carried as an audio signal that uses square-wave pulses to represent bits, and it counts in frames (HH:MM:SS:FF), allowing clip start and stop times to be imprinted in the metadata on file-based recording systems like audio recorders and video cameras.
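Linear timecode represents its bits with biphase mark coding: the signal flips level at the start of every bit, and flips once more mid-bit to mark a 1. The sketch below illustrates only that coding idea on a short, arbitrary bit pattern. It is not a full LTC frame encoder, the function names are illustrative, and the decoder assumes the input is already aligned to bit boundaries.

```python
def biphase_mark_encode(bits, start_level=1):
    """Encode bits as two half-bit levels (+1 or -1) per input bit."""
    level = start_level
    halves = []
    for bit in bits:
        level = -level          # transition at the start of every bit cell
        halves.append(level)
        if bit:
            level = -level      # extra mid-cell transition marks a 1
        halves.append(level)
    return halves


def biphase_mark_decode(halves):
    """Recover bits: a cell whose two halves differ is a 1, otherwise a 0."""
    if len(halves) % 2:
        raise ValueError("expected an even number of half-bit levels")
    return [1 if halves[i] != halves[i + 1] else 0
            for i in range(0, len(halves), 2)]


if __name__ == "__main__":
    pattern = [0, 1, 1, 0]      # arbitrary example bits
    wave = biphase_mark_encode(pattern)
    print(wave)
    print(biphase_mark_decode(wave))
```

Because the information lives in the transitions rather than the absolute level, the signal still decodes if its polarity is inverted somewhere in the chain.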

Timecode is the most important piece of metadata in modern video production workflows, allowing for easier alignment in post-production. SMPTE timecode lays out a set of standards for synchronization in multi-system workflows, in which multiple cameras and separate sound recorders share a common clock.

There are a few variations of timecode, but LTC (linear timecode) set to the time of day is what’s most commonly used today. Simply put, timecode is a clock signal that counts in frames, allowing every recording device on set to share or match timecode values, which enables a much less labor-intensive workflow in post.
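Because timecode is just a frame count written as HH:MM:SS:FF, aligning two clips comes down to simple arithmetic. Here is a minimal sketch for non-drop-frame timecode at a whole-number counting rate; the function names and the example start times are made up for illustration.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop 'HH:MM:SS:FF' to a frame count since 00:00:00:00."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError(f"frame field {ff} is out of range for {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_timecode(frames: int, fps: int) -> str:
    """Convert a frame count back to non-drop 'HH:MM:SS:FF'."""
    total_seconds, ff = divmod(frames, fps)
    total_minutes, ss = divmod(total_seconds, 60)
    hh, mm = divmod(total_minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def offset_in_frames(audio_start: str, video_start: str, fps: int) -> int:
    """How many frames into the audio file the video clip begins."""
    return timecode_to_frames(video_start, fps) - timecode_to_frames(audio_start, fps)


if __name__ == "__main__":
    # Hypothetical time-of-day start stamps for one audio file and one clip.
    print(offset_in_frames("10:15:28:00", "10:15:30:12", fps=24))
```

With those example stamps, the clip starts 60 frames (2 seconds and 12 frames) into the audio file, which is the slip an NLE applies when it syncs by timecode.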

What is Genlock?

We discussed genlock before, but let's revisit. Genlock is a protocol using an analog signal for synchronizing video sources, such as cameras or VTRs. It actually synchronizes the camera’s clock source to an external source dictating when each frame is taken.

Genlock historically used black burst to achieve this, but with the advent of HD video, it has shifted to tri-level sync. It was traditionally used in live broadcast switching to prevent artifacts and enable lower-latency video switching, and it also has applications in modern video production workflows for preventing camera drift.

Genlock prevents drift over the course of recording by replacing the internal clocking with an external source that is beating right along perfectly locked to the rate of timecode from the master clock.

What is Wordclock?

Wordclock is commonly used in the studio world to keep multiple stages of digital and analog conversion in sync, and it can also improve the stability of audio-visual sync when used in conjunction with timecode and genlock.

Although audio recorders require much more accurate clocks than video sources, timecode is still only metadata imprinted on the files the recorder generates. Once you start recording, the recorder's own clock takes over, and it can begin to drift on extremely long takes.

Wordclock can sync multiple audio sources at the sample rate level. This is an essential part of workflows with multiple audio recorders being used alongside one another to increase channel counts.

Ambient's MasterLockit devices are one example of gear with wordclock functionality.

What are Userbits?

Embedded in linear timecode there are additional bit slots known as userbits. These, as the name suggests, are user-settable and do not automatically advance.

On most devices, these user-definable bits can be set manually or provided via external LTC. Like timecode, userbits have eight slots (for example, 12:34:56:78), each of which can be set to any character 0-9 or A-F. That makes them versatile for carrying additional metadata such as date, roll number, camera letter, or scene number.
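As a rough illustration of fitting metadata into those eight hex slots, the sketch below packs a date, a camera letter, and a roll number. The layout (YY:MM:DD followed by camera letter and roll) is a hypothetical convention invented for this example; productions and devices use different layouts, so agree on one with post beforehand.

```python
import datetime


def make_userbits(date: datetime.date, camera_letter: str, roll_number: int) -> str:
    """Build an 8-slot userbit string in a hypothetical YY:MM:DD:<cam><roll> layout."""
    camera = camera_letter.upper()
    if len(camera) != 1 or camera not in "ABCDEF":
        raise ValueError("camera letter must be A-F to fit in one hex slot")
    if not 0 <= roll_number <= 15:
        raise ValueError("roll number must fit in one hex slot (0-15)")
    digits = f"{date:%y%m%d}{camera}{roll_number:X}"
    # Group the eight characters in pairs, the way timecode is displayed.
    return ":".join(digits[i:i + 2] for i in range(0, 8, 2))


def is_valid_userbits(text: str) -> bool:
    """Check that a string is eight characters 0-9 or A-F, ignoring colons."""
    digits = text.replace(":", "").upper()
    return len(digits) == 8 and all(ch in "0123456789ABCDEF" for ch in digits)


if __name__ == "__main__":
    ubits = make_userbits(datetime.date(2020, 6, 15), "A", 3)
    print(ubits, is_valid_userbits(ubits))
```

The single-slot camera and roll fields are a limitation of this particular layout: anything beyond camera F or roll 15 would need a different arrangement.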

Frame Rates

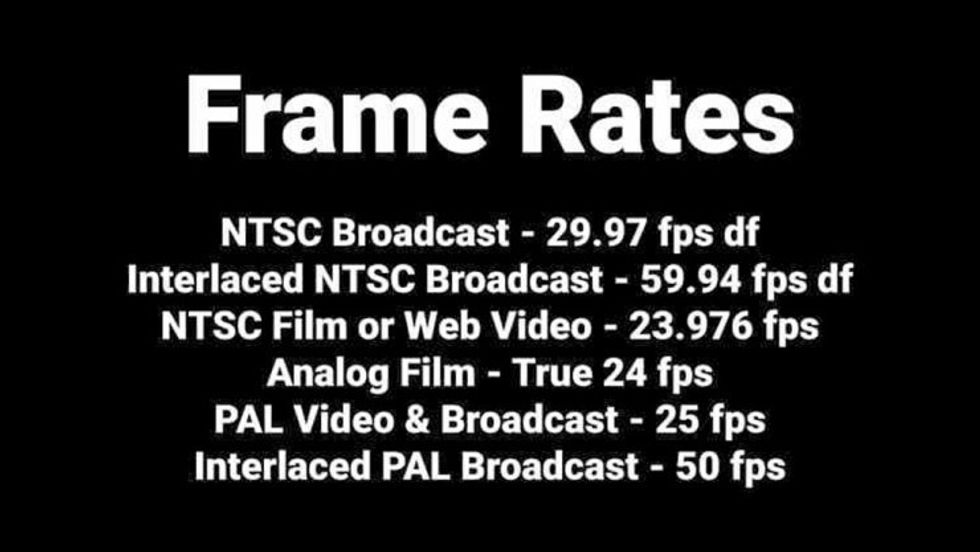

Frame rates are divided into two standards based on region, NTSC and PAL.

NTSC is predominantly used in North America, Japan, Central America, and a portion of South America, while PAL is predominantly used in Europe and the rest of the world, or in regions that have AC power at 50Hz.

While NTSC and PAL are largely outdated standards for color television broadcasts, the frame rates for each standard have remained mostly unchanged and are therefore still relevant because of backward compatibility.

The transition from black-and-white broadcasting to color required slowing frame rates by 0.1%, which is why we now have 29.97 instead of 30, and 23.976 instead of 24. For NTSC regions, the most common frame rates are 29.97 and 23.976 FPS, and for PAL regions, 25 FPS.
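The 0.1% slowdown is usually expressed as the exact ratio 1000/1001, which is where the familiar decimal rates come from. A small sketch of the arithmetic, using exact fractions so no rounding creeps in (`ntsc_rate` is an illustrative name):

```python
from fractions import Fraction

# Exact slowdown ratio behind the "0.1%" figure.
NTSC_FACTOR = Fraction(1000, 1001)


def ntsc_rate(nominal_fps: int) -> Fraction:
    """Exact fractional frame rate for a nominal whole-number rate."""
    return nominal_fps * NTSC_FACTOR


if __name__ == "__main__":
    for nominal in (24, 30, 60):
        rate = ntsc_rate(nominal)
        print(f"{nominal} -> {rate} = {float(rate):.3f} fps")
    slowdown = (1 - NTSC_FACTOR) * 100
    print(f"slowdown: {float(slowdown):.4f}%")
```

Working with 30000/1001 rather than the rounded 29.97 matters on long timelines, where the rounding error itself would accumulate.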

Since video cannot have partial frames, drop-frame timecode skips certain frame numbers in the count so that timecode does not drift away from real time. No actual frames of video are dropped; only the numbering changes.
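For 29.97 fps, the drop-frame rule is to skip frame numbers 00 and 01 at the start of every minute, except every tenth minute. The sketch below converts a running frame count into a drop-frame label under that rule. The function name is illustrative, and the semicolon before the frame field is a common display convention for drop-frame rather than something every device uses.

```python
FPS = 30                      # nominal counting rate for 29.97 fps material
DROP = 2                      # frame numbers skipped per affected minute
FRAMES_PER_MIN = 60 * FPS - DROP                 # 1798 labels in a "dropped" minute
FRAMES_PER_10MIN = 10 * 60 * FPS - 9 * DROP      # 17982 real frames per ten minutes


def frames_to_df_timecode(frame_number: int) -> str:
    """Label a 29.97 fps frame count with drop-frame timecode."""
    tens, rem = divmod(frame_number, FRAMES_PER_10MIN)
    skipped = DROP * 9 * tens                    # nine dropped minutes per full block
    if rem >= DROP:
        skipped += DROP * ((rem - DROP) // FRAMES_PER_MIN)
    n = frame_number + skipped                   # position in the 30 fps numbering
    ff = n % FPS
    ss = (n // FPS) % 60
    mm = (n // (FPS * 60)) % 60
    hh = n // (FPS * 3600)
    return f"{hh:02d}:{mm:02d}:{ss:02d};{ff:02d}"


if __name__ == "__main__":
    for count in (1799, 1800, 17982, 107892):
        print(count, frames_to_df_timecode(count))
```

The frame after 00:00:59;29 is labeled 00:01:00;02, while the tenth minute keeps its 00 and 01. At 30000/1001 fps, 107892 frames last about 3599.996 seconds, so the 01:00:00;00 label lands within a few milliseconds of one real hour.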

To add more confusion to frame rates, it’s not uncommon for frame rates to be referred to incorrectly by their closest whole number. (For example, people incorrectly calling 23.976fps 24fps, or 29.97fps 30fps.) Knowing what’s commonly used in various scenarios can help avoid confusion, but it never hurts to ask questions about the correct frame rate for that specific production and its final destination.

Someone from post-production ideally should be involved in this discussion, but when not available, the director of photography or producer should have the answers.

Audio Timecode Workflow for Mirrorless/DSLR Cameras

When a camera doesn't have a timecode input (and definitely not genlock), you can still benefit from the implementation of timecode over audio.

Because linear timecode is carried as a square-wave audio signal, it can easily be recorded to an audio channel and decoded into metadata by software in post-production.

Just note that timecode signals can be quite hot, so attenuating the signal and lowering the gain on the camera will likely be needed to prevent the signal from clipping. Then using software such as Tentacle Sync Studio or DaVinci Resolve, you can replace the timecode metadata on the video files based on timecode recorded to an embedded audio channel.
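One way to sanity-check a test recording before the shoot is to look at the peak level of the timecode channel. The sketch below assumes the channel has already been loaded as floating-point samples normalized to the range -1.0 to 1.0; the clipping threshold and run length are arbitrary illustrative values, not a standard.

```python
import math


def peak_dbfs(samples) -> float:
    """Peak level in dB relative to full scale (0 dBFS = 1.0)."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")
    return 20 * math.log10(peak)


def looks_clipped(samples, threshold: float = 0.999, min_run: int = 3) -> bool:
    """Flag a run of consecutive samples pinned at or near full scale.

    threshold and min_run are hypothetical defaults for illustration.
    """
    run = 0
    for s in samples:
        if abs(s) >= threshold:
            run += 1
            if run >= min_run:
                return True
        else:
            run = 0
    return False


if __name__ == "__main__":
    too_hot = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]      # made-up sample values
    attenuated = [0.25, 0.25, -0.25, -0.25]
    print(peak_dbfs(too_hot), looks_clipped(too_hot))
    print(round(peak_dbfs(attenuated), 1), looks_clipped(attenuated))
```

If the check flags the recording, lower the level going into the camera and test again before rolling for real.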

It’s really as simple as that.

Timecode for Audio Playback

Music videos, commercials, and narrative films often require music playback, and playback can also take advantage of timecode for easier sync. Using a timecode generator such as El-Tee-See, you can generate linear timecode with specific start times and runtimes.

This can then be edited into a WAV file that plays a mono summed version of the music on channel 1 and timecode on channel 2. Then, using an interface attached to a PA system and a wireless transmitter, with the receiver on a smart slate in read mode, it’s ready to go. Make sure there is plenty of timecode preroll so there is time to get the slate in and out before the music starts. I also use a 2-pop lasting 1 frame, exactly 2 seconds before the start time, and lower-volume cueing music to help the musicians anticipate their entrance.
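The cue points for a playback file can be worked out from the music's start timecode. In this sketch the 2-pop follows the description above (1 frame long, exactly 2 seconds before the start), while the 10-second preroll and the 01:00:00:00 start are assumptions picked for the example; all function names are illustrative.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop 'HH:MM:SS:FF' to a frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_timecode(frames: int, fps: int) -> str:
    """Convert a frame count back to non-drop 'HH:MM:SS:FF'."""
    total_seconds, ff = divmod(frames, fps)
    total_minutes, ss = divmod(total_seconds, 60)
    hh, mm = divmod(total_minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def playback_cues(music_start: str, fps: int, preroll_seconds: int = 10) -> dict:
    """Timecode values for preroll start, 2-pop, and music start.

    preroll_seconds is a hypothetical default; use whatever gives the
    slate enough time on your set.
    """
    start = timecode_to_frames(music_start, fps)
    pop = start - 2 * fps                      # exactly 2 seconds before the music
    return {
        "preroll_start": frames_to_timecode(start - preroll_seconds * fps, fps),
        "two_pop_start": frames_to_timecode(pop, fps),
        "two_pop_end": frames_to_timecode(pop + 1, fps),   # pop lasts 1 frame
        "music_start": music_start,
    }


if __name__ == "__main__":
    for name, tc in playback_cues("01:00:00:00", fps=24).items():
        print(f"{name:>14}: {tc}")
```

Starting the music on a round timecode value such as an even hour makes it easy to tell from a slate reading how far into the song a take begins.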

Summary

With some basic knowledge, syncing video and audio can be achieved for just about any application. If you take on a project that requires longer takes, consider using genlock as well as timecode workflows. If you have multiple audio recorders and cameras, wordclock is the way to go. Plus, it never hurts to have redundancy in your system.

Have any tips about syncing audio to video on productions? Share them with readers in the comments below.

For more, see our ongoing coverage of Sound Week 2020.

No Film School's podcast and editorial coverage of Sound Week 2020 is sponsored by RØDE.