Virtual Production Is Here, and You Can Learn All About It

Become an expert on virtual productions before you even arrive on set.

This is the first article in a four-part series on virtual production (VP). In this part, we introduce some basic types of virtual production. VP is a rapidly evolving set of filmmaking tools, so the definitions used in this series are moving targets and may change over time. What follows describes where virtual production currently fits within the filmmaking industry.

Virtual production is poised to change the way we make movies. This isn’t news anymore. It is something people like me are actively working to make a reality. Why?

- It lowers the barriers to entry for emerging filmmakers

- It gives complete creative control over the environments and world of the story

- It is a highly cost-effective solution to the logistics of shooting on location

But before we actually dive into the ways we use it on and off set, let's set our framing—what is virtual production best used for?

Actually, let’s start with the more obvious question first: what the heck is virtual production? It’s a catch-all term that refers to a lot of different things, but for the purposes of this series, here is what I am referring to when I use the term virtual production:

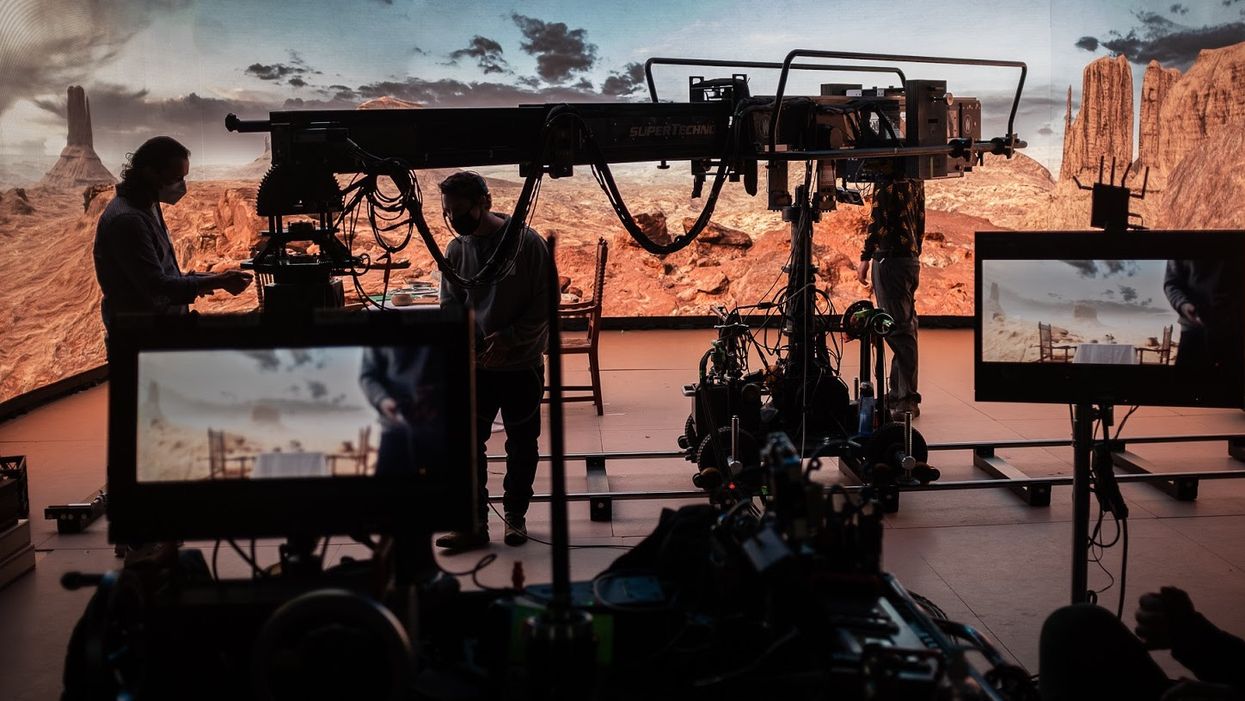

Using LED volume stages for film and commercial productions is becoming more popular following the critical success of Disney’s The Mandalorian, lensed by Greig Fraser.

For those who don’t know what I’m talking about, here is a brief introduction to shooting virtual productions and what to expect. Let’s dive in!

Motion tracking and capture

While some form of mocap has been around in film and gaming for a long time, using the tools available to capture the motion of an object, actor, or camera is a big part of what makes virtual production so exciting. Realistic human motion can be encoded in real time into a 3D graphics engine and used for a huge number of things, including:

- Capture human movement for an animated character

- Capture the movement of a prop or set piece to render onto a complex animation

- Capture the movement of a camera in 3D space so the background of the set can be changed while the camera movement and all the visual distortions of that movement are maintained
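To make the tracking idea concrete, here is a minimal Python sketch, not taken from any particular tracking system or engine. It assumes a hypothetical tracker that streams timestamped positions for a camera, prop, or actor, and shows one common chore: tracking systems and render engines rarely run at the same rate, so the engine estimates where the tracked object was at the exact moment it renders a frame by interpolating between the two nearest samples. `TrackedSample` and `position_at` are illustrative names. A real camera track also carries orientation, which would need spherical (quaternion) interpolation rather than the straight-line blend used here.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackedSample:
    """One hypothetical tracking sample: a timestamp in seconds and an
    (x, y, z) position in metres, in stage coordinates."""
    t: float
    position: tuple[float, float, float]


def position_at(samples: list[TrackedSample], t: float) -> tuple[float, ...]:
    """Linearly interpolate the tracked position at render time t.

    Samples must be sorted by strictly increasing timestamp. Times outside
    the recorded range are clamped to the first or last sample.
    """
    if not samples:
        raise ValueError("no tracking samples")
    times = [s.t for s in samples]
    if t <= times[0]:
        return samples[0].position
    if t >= times[-1]:
        return samples[-1].position
    i = bisect_left(times, t)  # index of the first sample with time >= t
    a, b = samples[i - 1], samples[i]
    w = (t - a.t) / (b.t - a.t)  # 0.0 at sample a, 1.0 at sample b
    return tuple(pa + w * (pb - pa) for pa, pb in zip(a.position, b.position))
```

For example, with two samples 10 ms apart, a frame rendered at the 5 ms mark gets a position halfway between them.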

Previsualization and scouting

Previs and scouting are two separate things, but in essence, they use the same tools in the context of virtual production. They both utilize a real-time game engine such as Unreal Engine or Unity to achieve their desired results.

Virtual scouting

Virtual scouting is the act of bringing a real-world environment (or one created from scratch) into a game engine and exploring it remotely with your team of creatives to decide if that location works for your production.

Inside a game engine, you can mimic different real-world lighting styles and solve some of the most complex logistical challenges without ever setting foot on-site. This, obviously, can save you the immense costs of travel—flights, meals, hotels, transport, security, etc.—required to take a whole team of creatives to a distant location.
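As a small example of mimicking real-world lighting, here is a rough Python sketch of the sun’s height above the horizon for a given latitude, date, and time of day, the kind of question a virtual scout answers when deciding when a location will look right. It uses a simplified textbook approximation (solar declination from the day of the year, and an hour angle of 15° per hour away from solar noon) and ignores time zones, the equation of time, and atmospheric refraction, so treat its output as a ballpark figure. Game engines provide their own sun and sky tools; this only illustrates the geometry behind them, and the function name is hypothetical.

```python
import math


def solar_elevation_deg(latitude_deg: float, day_of_year: int, solar_hour: float) -> float:
    """Approximate sun elevation above the horizon, in degrees.

    Simplified approximation: declination from the day of the year, hour
    angle from local *solar* time (12.0 = solar noon). Ignores time zones,
    the equation of time and atmospheric refraction.
    """
    # Solar declination: about -23.44 deg near the December solstice,
    # about +23.44 deg near the June solstice.
    decl = -23.44 * math.cos(math.radians(360.0 / 365.0 * (day_of_year + 10)))
    # Hour angle: the sun moves 15 degrees per hour relative to solar noon.
    hour_angle = 15.0 * (solar_hour - 12.0)
    lat, dec, ha = map(math.radians, (latitude_deg, decl, hour_angle))
    sin_elev = math.sin(lat) * math.sin(dec) + math.cos(lat) * math.cos(dec) * math.cos(ha)
    # Clamp to guard against tiny floating-point overshoot before asin.
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_elev))))
```

For Vancouver (roughly 49.3° N) around the June solstice (day 172), `solar_elevation_deg(49.3, 172, 12.0)` comes out at roughly 64° at solar noon.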

If the location doesn’t exist yet, it can be built from scratch in a studio. Exploring a virtual version of the set beforehand and blocking out the scenes that will take place there helps ensure the set designer and construction team build it only once, with everything the production needs, avoiding costly rebuilds or modifications once the set is reviewed for shooting.

Previsualization

Previsualization takes virtual scouting and brings it to the next level, where your intention is to shoot, edit, and export entire sequences or scenes of a film before it’s even time to roll a physical camera. This helps you determine what creative choices to make on set on the day to avoid reshoots or costly delays.

While this technique is not new to the world of film and television, creating previsualization in real time within a game engine makes the process more inclusive for members of the creative team who are less fluent in 3D software, ensuring that everyone can sign off on the final look before it has to be realized on set.
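Previs is only as useful as its match to the real camera, and one basic part of that match is the lens. The Python sketch below uses the standard rectilinear-lens relationship between focal length, sensor width, and horizontal angle of view, so a virtual camera can be given the same framing as the physical one. It assumes focus at infinity and ignores lens distortion and focus breathing; the function names are illustrative, not part of any engine’s API.

```python
import math


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal angle of view of a rectilinear lens focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def focal_length_for_fov(fov_deg: float, sensor_width_mm: float) -> float:
    """Inverse: the focal length that gives a target horizontal angle of view."""
    return sensor_width_mm / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
```

On a full-frame sensor (36 mm wide), a 50 mm lens gives a horizontal angle of view of about 39.6°, and `focal_length_for_fov(39.6, 36.0)` returns roughly 50 mm.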

LED volumetric filmmaking

Finally, we have LED-driven volumetric filmmaking. This is what the world is excited about right now, thanks to the commercial and critical success of Disney’s The Mandalorian, which is pioneering a method of using real-time capture driven by the Unreal game engine to make scenes shot on set in not-so-real environments more believable for the audience.

Let’s break down what this involves.

I’m going to avoid being overly technical about this—though there is a very deep rabbit hole we could dive into, I’ll let you do your own research about the tech at play here if you are interested.

The LEDs

On the hardware side, the most technical part of this process is displaying real-time "final pixel" imagery behind your scene, essentially a modern form of rear projection, using LED panels as the background source for that image.

While there are many different types of panels on the market, each with its own benefits and drawbacks, ROE Black Pearl 2s are the ones I’ve used the most, and they were used in the filming of The Mandalorian. So, for lack of an official "standard," I would say these are near the top of the list.

The common pipeline starts with the image being manipulated on the VP workstation. A dedicated rendering computer then renders it at the necessary resolution and sends it to a switcher, which passes the signal to the onboard color processor and finally to the panels themselves, which display the image.
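One way to reason about the chain above is as an ordered list of stages, each adding some delay between the engine producing an image and the panels displaying it. The Python sketch below is a toy model under stated assumptions: the stage names mirror the description above, but the latency values are placeholders rather than measurements of any real hardware, and `Stage`, `PIPELINE`, and `latency_in_frames` are hypothetical names. Expressing the total in camera frames makes it easy to compare against your shooting frame rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    latency_ms: float  # placeholder value; measure your own system


# Hypothetical stage list mirroring the signal chain described above.
# The latency numbers are placeholders, NOT measurements of real hardware.
PIPELINE = [
    Stage("VP workstation (scene edits)", 0.0),
    Stage("render node", 16.7),
    Stage("switcher", 1.0),
    Stage("LED color processor", 16.7),
    Stage("LED panels", 4.0),
]


def latency_in_frames(stages: list[Stage], fps: float) -> float:
    """Total signal-chain delay expressed in camera frames at the given frame rate."""
    total_ms = sum(s.latency_ms for s in stages)
    frame_ms = 1000.0 / fps  # duration of one camera frame in milliseconds
    return total_ms / frame_ms
```

With the placeholder values shown, the chain totals 38.4 ms, just under one frame at 24 fps. Swap in figures measured on your own stage before drawing any conclusions.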

The engine

This is the part where things get really exciting. We are using video game engines to render "final pixel" quality 3D environments for real-time playback onto these LED screens. The environments used are often designed in these engines, and this is where the previs, scouting, and testing all take place.

This is the element that holds it all together, and due to recent advancements in prosumer-grade GPU technology, this tool is becoming accessible to any filmmaker dedicated to learning it.

Both Unreal and Unity have toolkits for virtual production, and both seem really keen to make them approachable for non-technical users looking to use them for film and TV.

I have used both game engines and prefer Unreal for virtual production because of its expansive community of developers and plug-in support. There is so much written on the Unity vs. Unreal debate, though, that I’ll let you decide for yourself.

Try them both—they are free to try and learn from, and both boast amazing communities and libraries of how-to videos that can help you get started.

The tracking

So what actually makes this "volumetric"?

It’s the fact that our camera, and sometimes props and actors, are tracked in 3D space in real time. Their positions on the stage are processed in the game engine and placed into the 3D environment, and the field of view and parallax that match your camera position are displayed on the LED screen in the background, even if you are doing complex handheld and crane movements.
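The geometry behind matching parallax can be shown with a single point. In this Python sketch (an illustration, not how any particular engine implements it), the LED wall is the flat plane z = 0, the tracked camera stands in front of it, and a piece of virtual scenery sits "behind" it. The point has to be drawn where the line from the camera to the virtual point crosses the wall, so when the camera moves, the drawn position moves too, by an amount that depends on how far behind the wall the scenery is supposed to be. Real systems apply the same idea to the whole image by rendering with a projection matched to the tracked camera’s position and lens; the coordinate convention and the name `wall_point` are assumptions.

```python
def wall_point(camera: tuple[float, float, float],
               virtual_point: tuple[float, float, float]) -> tuple[float, float]:
    """Where on a flat LED wall (the plane z = 0) a virtual point must be drawn
    so that it lines up with its 3D position as seen from the tracked camera.

    Assumed stage convention: the wall lies in z = 0, the camera is in front
    of it (z > 0), and the virtual scenery is behind it (z < 0).
    """
    cx, cy, cz = camera
    px, py, pz = virtual_point
    if cz <= 0 or pz >= 0:
        raise ValueError("camera must be in front of the wall and the point behind it")
    # Fraction of the way from the camera to the point at which z reaches 0.
    s = cz / (cz - pz)
    return (cx + s * (px - cx), cy + s * (py - cy))
```

With the camera 4 m from the wall, moving it 1 m sideways shifts a point 4 m behind the wall by 0.5 m on the wall, while a point 1,000 m away moves almost in step with the camera, which is why distant scenery stays put in the frame the way it would on a real location.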

There are many ways to track an object in 3D space, which I’ll describe in more detail in another article.

How does it all work?

So, quick overview: you stand on a stage with a camera, filming your scene. The camera is tracked in volumetric space within a game engine, which then takes that information and places you in a 3D environment, filling in the background of your shot using LED screens that your stage is built around.

Not only does this create the illusion that you could be standing on the Cliffs of Dover or the tundras of Mars, but it also gives you a way to emulate the lighting of that environment using the LED screens as active sources, something you would have to solve for if you were using green screen instead.
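The step where the engine "places you in a 3D environment" can be thought of as a coordinate transform: the tracking system reports positions relative to the physical stage, and the engine maps them into the virtual world according to wherever the stage has been placed in that world. Here is a minimal Python sketch, assuming a hypothetical convention in which z is up and the stage is positioned by a world-space origin plus a rotation about the vertical axis; `stage_to_world` and its parameters are illustrative names, not an engine API.

```python
import math


def stage_to_world(stage_pos: tuple[float, float, float],
                   stage_origin_world: tuple[float, float, float],
                   stage_yaw_deg: float) -> tuple[float, float, float]:
    """Map a point tracked in stage coordinates into virtual-world coordinates.

    Assumed convention: z is up; the stage is placed in the world by a
    world-space origin and a rotation (yaw) about the z axis.
    """
    x, y, z = stage_pos
    ox, oy, oz = stage_origin_world
    a = math.radians(stage_yaw_deg)
    # Rotate the stage-relative offset about z, then translate to the origin.
    wx = ox + x * math.cos(a) - y * math.sin(a)
    wy = oy + x * math.sin(a) + y * math.cos(a)
    return (wx, wy, oz + z)
```

Moving the production from the Cliffs of Dover to Mars then amounts to changing `stage_origin_world` and `stage_yaw_deg` (and loading a different environment); the tracked camera data itself does not change.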

Final thoughts

There is a lot more to dive into here, so if you are curious about the world of virtual production, I have included some links below of videos to watch and articles to read that cover it in more depth.

This is also the first in a series of four articles I’ve written to help traditional filmmakers understand more about the world of VP and how gaming and film are converging. In the next article, I’ll be answering the question, "What is the difference between virtual production and traditional production?"

Learn more about virtual production

Here are just a few resources you can check out to get even deeper into virtual production and Unreal. Give them a look!

Long—Written

- The Virtual Production Field Guide, Noah Kadner, Basic to intermediate knowledge

Long—Video

- Live Panel on Virtual Production—Canadian Society of Cinematographers, 100 mins, Intermediate knowledge

- Intersection between Camera, VFX, and Lighting for Virtual Production—Visual Effects Society, 120 mins, Intermediate to advanced knowledge

Short—Video

- Project Spotlight: UE4’s Next-Gen Virtual Production Tools—The Unreal Engine, 12 mins, Basic knowledge

- Virtual Production - A cinematographer’s conversation—FX Guide, 28 mins, Basic knowledge

Short—Audio

- Virtual Production—Podcast by Noah Kadner, 15 mins per episode, Basic to intermediate knowledge

Long—Audio

- Virtual Production—SIGGRAPH Spotlight Podcast, 45 mins, Intermediate knowledge

Other

- Virtual Production Resources—Visual Effects Society

- Unreal Build: Virtual Production Conference 2020—The Unreal Engine

- Unreal Online Learning—The Unreal Engine

Groups

- Virtual Production Facebook Group—13k global members

- Unreal Engine: Virtual Production Facebook Group—40k global members

What's next? Continue your learning

Things are different for cinematographers who shoot on volumetric stages, and there are new tools at your disposal. Learn what cinematographers need to know going into a virtual production. Then read about the differences between virtual production and traditional production.

Special thanks to the Directors Guild of Canada for the use of the photos in this article from our amazing shoot in February 2021.

Karl Janisse is a Canadian cinematographer, photographer, and visual storyteller currently residing in Vancouver, BC, who specializes in virtual production cinematography and workflow design. He believes great images make the heart bigger and the world smaller. Karl also co-founded Pocket Film School, an online school that brings education about the film industry to people around the world.