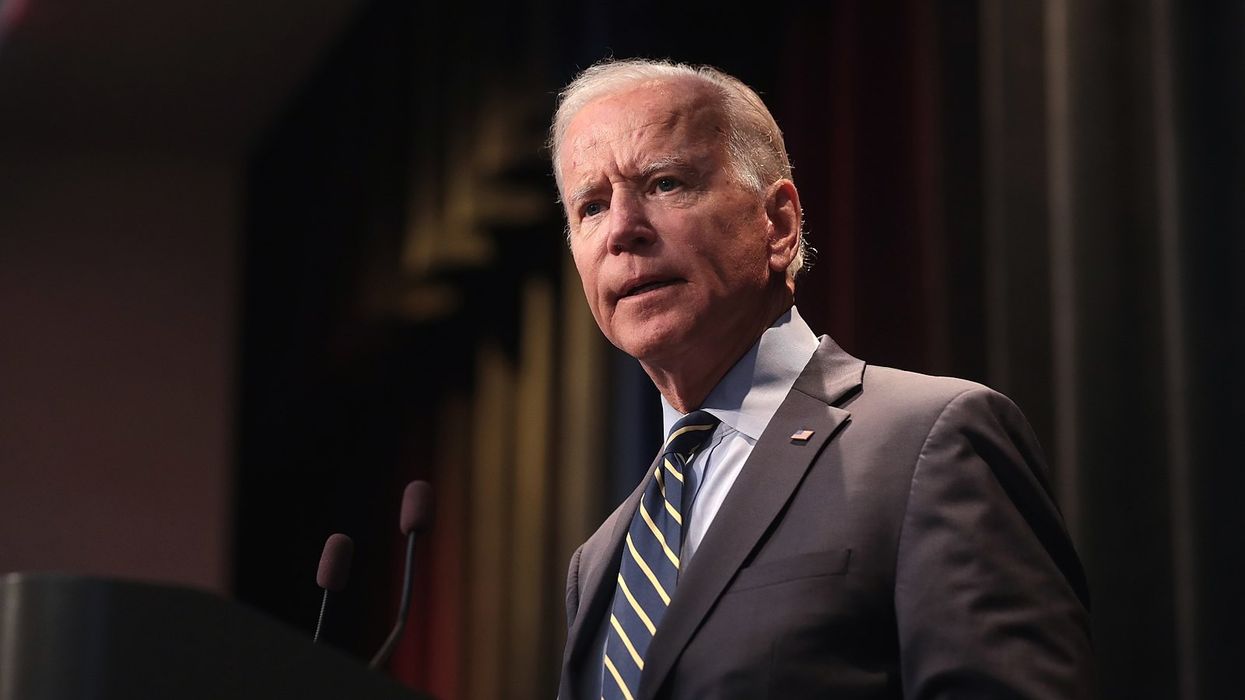

How Is President Biden Influencing the Future of AI Video Content?

Let's take a look at how a new executive order outlines the federal government’s first regulations on AI systems, and what that could mean for the future of AI video content.

President Biden's AI Executive Order

Building on non-binding commitments that the White House secured from several of the major players in artificial intelligence technology (including Meta, Google, OpenAI, Nvidia, and Adobe), President Biden has signed an executive order laying out the federal government’s first regulations on AI systems.

This new executive order, combined with the Blueprint for an AI Bill of Rights released in October 2022, is the Biden administration’s latest effort to establish a more formal framework for governing current AI models and systems and for addressing future AI developments and the concerns they may raise.

Let’s take a look at what this new executive order promises to regulate for AI today and in the future, and in particular how it pertains to AI-generated video.

According to an official statement from the White House Briefing Room, President Biden has issued an “Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence,” which is set to “ensure that America leads the way in seizing the promise and managing the risks of artificial intelligence (AI).”

This executive order sets new standards for AI safety and security, protects Americans’ privacy, advances equity and civil rights, stands up for consumers and workers, promotes innovation and competition, advances American leadership around the world, and more.

The executive order is wide-reaching, but it does provide the blueprint for a comprehensive strategy on responsible innovation, building on the voluntary commitments from 15 leading companies to drive safe, secure, and trustworthy development of AI.

Technical Mechanisms for Watermarking AI

Perhaps the most important development to come from this executive order, and from previous White House efforts to work with AI tech leaders, is a commitment that companies will develop technical mechanisms for watermarking AI-generated material, so that audiences can tell when what they’re looking at was generated by AI.

That said, this might be a bit tricky to implement in the real world.

As we’ve seen with new technological developments such as cameras with built-in encrypted metadata, the battle for ensuring asset and creative ownership is coming to the forefront of the national discussion.

For video in particular, watermarking would be quite difficult to implement, but it is highly important. We’ve already seen AI technology move into the film and video space. Chances are you’ve watched AI-generated content in movies, television, and advertisements at this point.

While these commitments are only voluntary at this point, several of the AI tech companies from these conversations have agreed to share information across government, academia, and civil society as a way to reduce the risks of AI and to help develop future systems that make watermarking and other IP issues easier to handle and more transparent.

The Future of AI Video

Still, at the heart of this ongoing discussion regarding AI-generated video, there are more questions than answers. This latest executive order should be a small step in the right direction to signify that the federal government is aware of industry and personal concerns and is actively working with industry leaders to develop means to solve these current and future issues.

However, for video in particular, content creators should note that this executive order, or any other federal action, will not recall any available models already out there.

If you’re interested in exploring AI for generative, editing, or color grading processes, those tools are still out there, with more likely in development and on the way.

Keep in mind, too, that an executive order is not permanent law: it stays in effect only until it is revoked or superseded, and a future administration can rescind it. So while these executive orders might be a step in the right direction, the federal government’s involvement is subject to change in the future as well.
